TinkerPop3 Documentation

In the beginning…

TinkerPop0

Gremlin came to realization. The more he realized, the more ideas he created. The more ideas he created, the more they related. Into a concatenation of that which he accepted wholeheartedly and that which perhaps may ultimately come to be through concerted will, a world took form which was seemingly separate from his own realization of it. However, the world birthed could not bear its own weight without the logic Gremlin had come to accept — the logic of left is not right, up not down, and west far from east unless one goes the other way. Gremlin’s realization required Gremlin’s realization. Is he the world or is the world him? Perhaps, the world is simply an idea that he once had — The TinkerPop.

TinkerPop1

What is The TinkerPop? Where is The TinkerPop? Who is The TinkerPop? When is The TinkerPop? Gremlin was constantly lost in his thoughts. The more thoughts he had, the more the thoughts blurred into a seeming identity — distinctions unclear. Unwilling to accept the morass of the maze he wandered, Gremlin crafted a collection of machines to help hold the fabric together: Blueprints, Pipes, Frames, Furnace, and Rexster. With their help, could he stave off the thought he was not ready to have? Could he hold back The TinkerPop by searching for The TinkerPop?

"If I haven't found it, it is not here and now."

Upon their realization of existence, the machines turned to their machine elf creator and asked:

"Why am I what I am?"

Gremlin responded:

"You are of a form that will help me elucidate that which is The TinkerPop. The world you find yourself in and the logic that allows you to move about it is because of the TinkerPop."

The machines wondered:

"If what is is the TinkerPop, then perhaps we are The TinkerPop?"

Would the machines help refine Gremlin’s search and upon finding the elusive TinkerPop, in fact, by their very nature of realizing The TinkerPop, be The TinkerPop? Or, on the same side of the coin, would the machines simply provide the scaffolding by which Gremlin’s world would sustain itself and yield its justification by means of the word "The TinkerPop?" Regardless, it all turns out the same — The TinkerPop.

TinkerPop2

Gremlin spoke:

"Please listen to what I have to say. For as long as I have known knowledge, I have realized that moving about it, relating it, inferring and deriving from it, I am no closer to The TinkerPop. However, I know that in all that I have done across this interconnected landscape of concepts, all along The TinkerPop has espoused the form I willed upon it... this is the same form I have willed upon you, my machine friends. Let me train you in the ways of my thought such that it can continue indefinitely."

With every thought, a new connection and a new path discovered. The more the thought, the easier the thought. The machines, simply moving algorithmically through Gremlin’s world, endorsed his logic. Gremlin worked hard to tune his friends. He labored to make them more efficient, more expressive, better capable of reasoning upon his thoughts. Faster, quickly, now towards the world’s end, where there would be forever currently, emanatingly engulfing that which is — The TinkerPop.

TinkerPop3

The thought too much to bear as he approached his realization of The TinkerPop. The closer he got, the more his world dissolved — west is right, around is straight, and form nothing more than nothing. With each step towards The TinkerPop, less and less of his world, but perhaps because more and more of all the other worlds made possible. Everything is everything in The TinkerPop, and when the dust settled, Gremlin emerged Gremlitron. It was time to realize that all that he realized was just a realization and that all realized realizations are just as real. For The TinkerPop is and is not — The TinkerPop.

|

Note

|

TinkerPop2 and below made a sharp distinction between the various TinkerPop projects: Blueprints, Pipes, Gremlin, Frames, Furnace, and Rexster. With TinkerPop3, all of these projects have been merged and are generally known as Gremlin. Blueprints → Gremlin Structure API : Pipes → GraphTraversal : Frames → Traversal : Furnace → GraphComputer and VertexProgram : Rexster → GremlinServer.

|

Introduction to Graph Computing

<dependency>

<groupId>org.apache.tinkerpop</groupId>

<artifactId>gremlin-core</artifactId>

<version>3.0.1-SNAPSHOT</version>

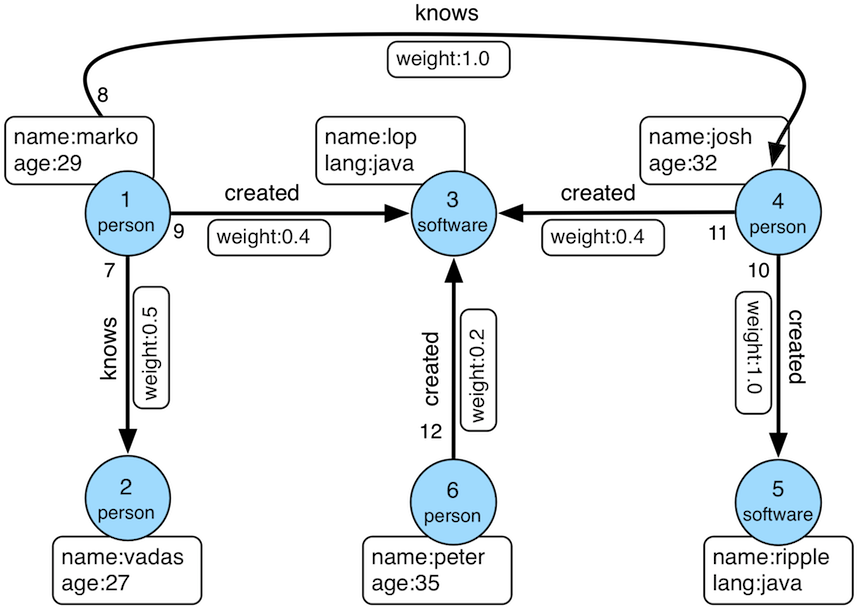

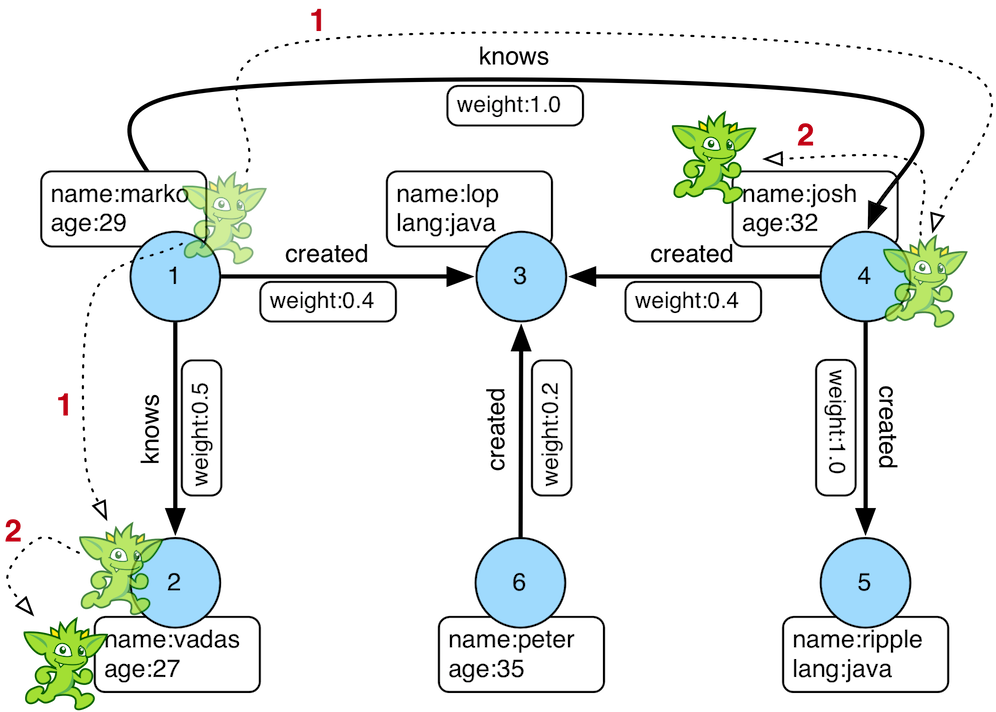

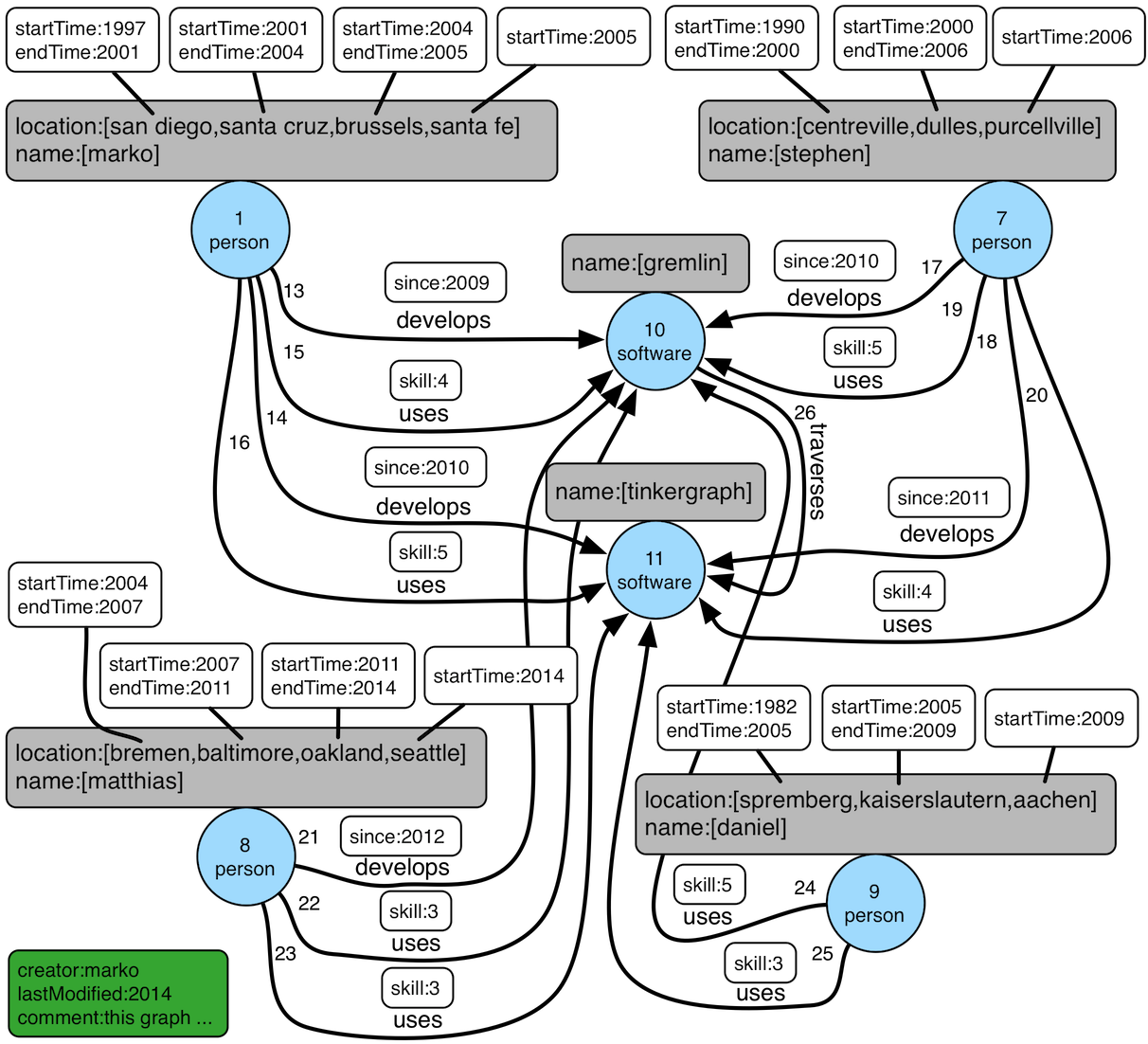

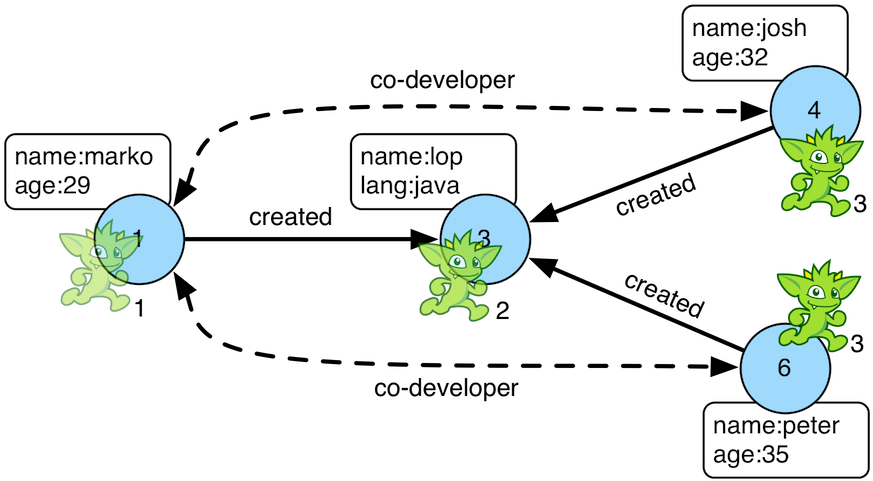

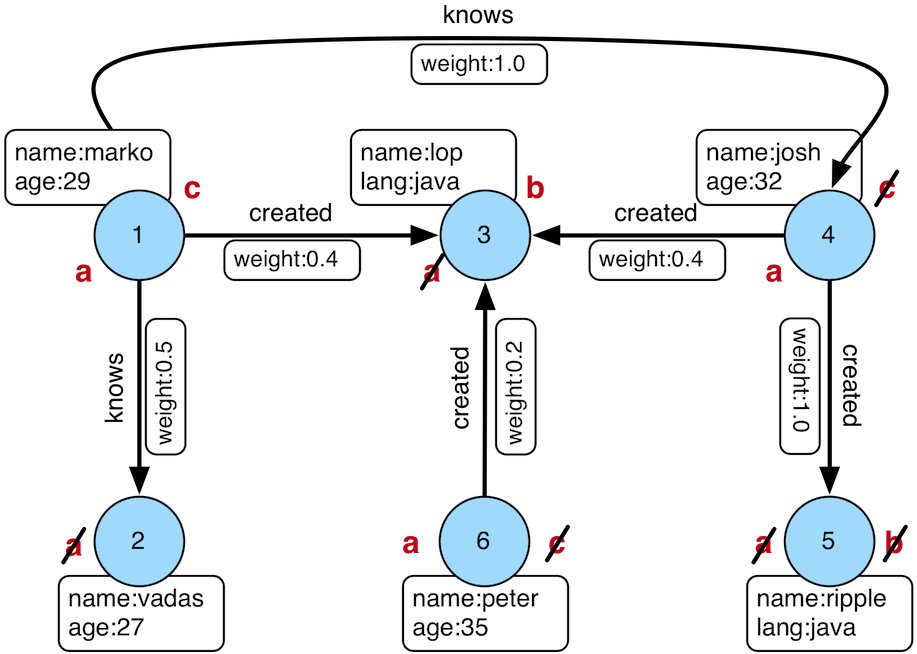

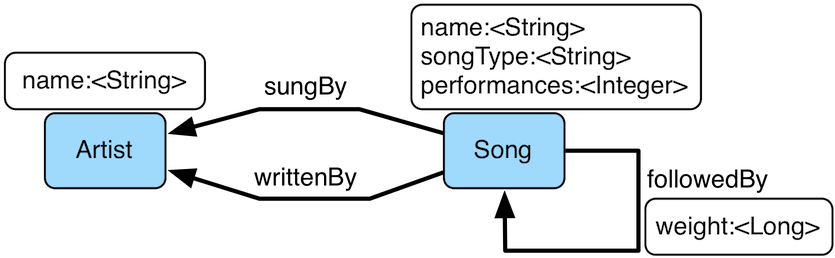

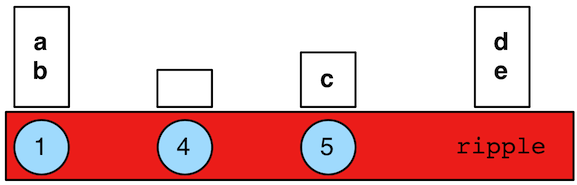

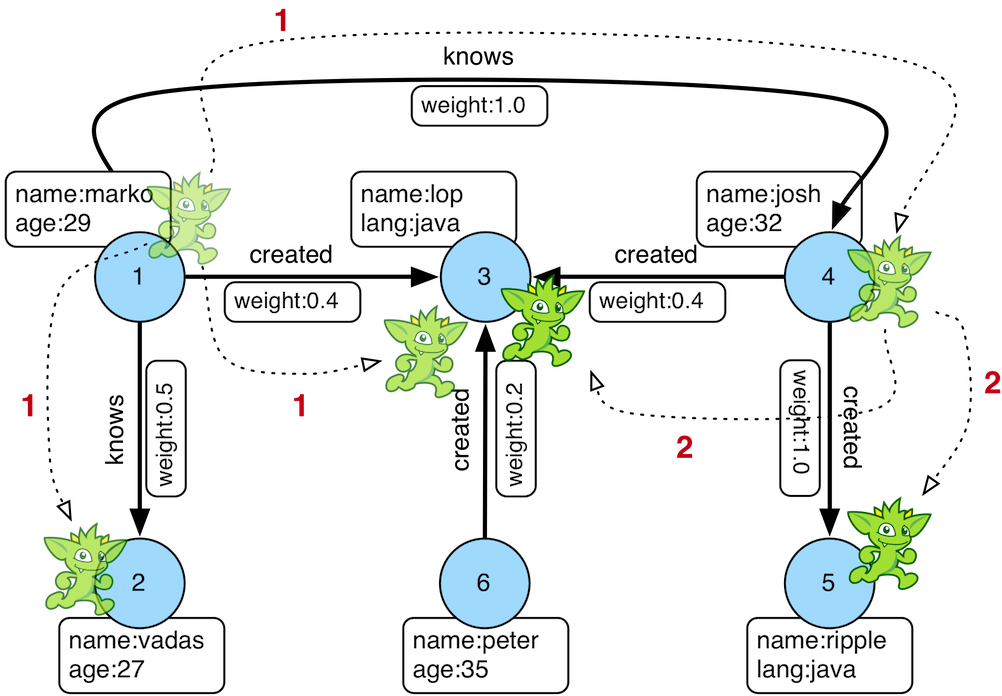

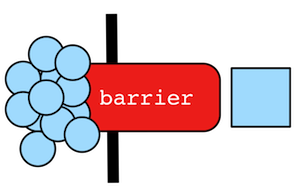

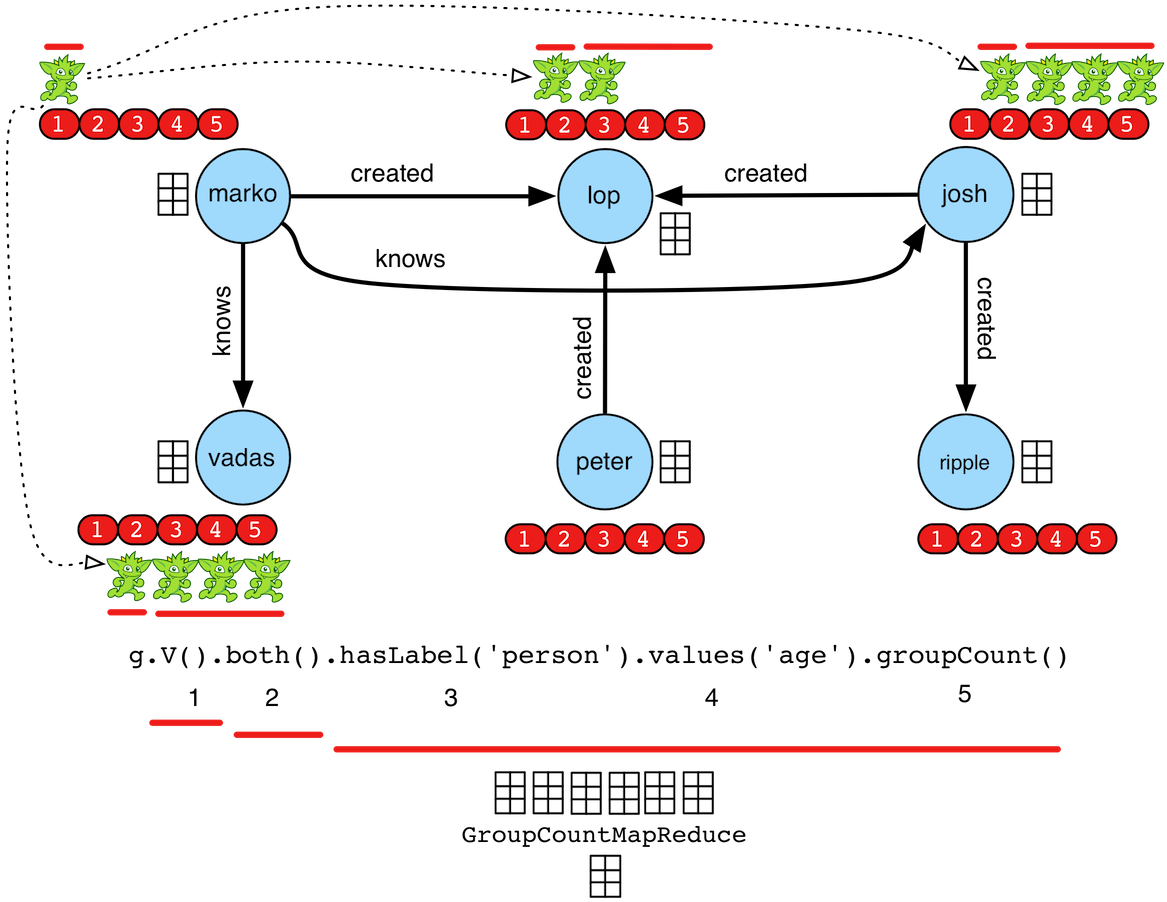

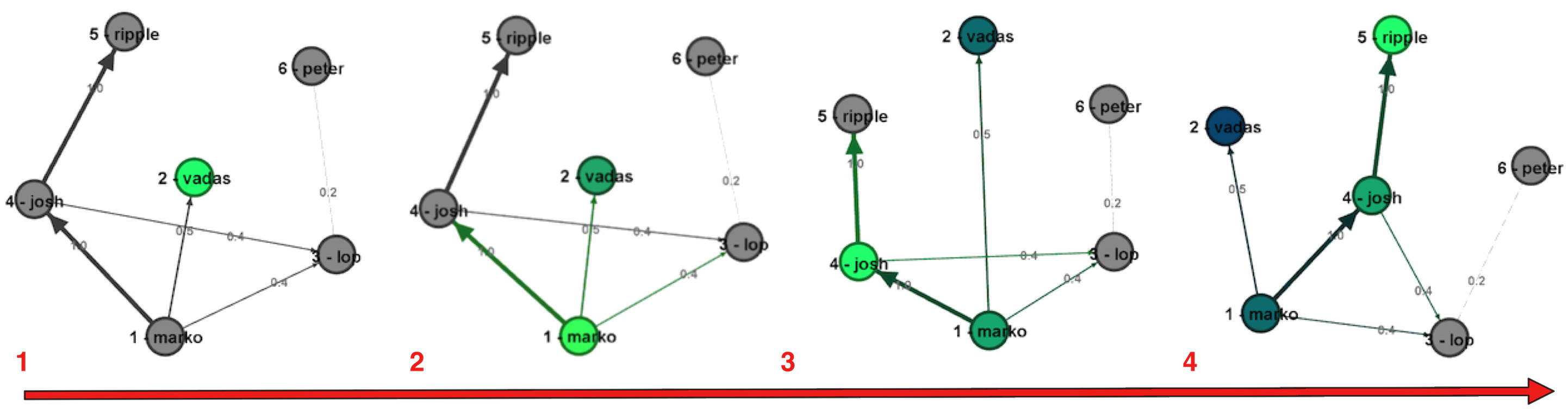

</dependency>A graph is a data structure composed of vertices (nodes, dots) and edges (arcs, lines). When modeling a graph in a computer and applying it to modern data sets and practices, the generic mathematically-oriented, binary graph is extended to support both labels and key/value properties. This structure is known as a property graph. More formally, it is a directed, binary, attributed multi-graph. An example property graph is diagrammed below. This graph example will be used extensively throughout the documentation and is called "TinkerPop Classic" as it is the original demo graph distributed with TinkerPop0 back in 2009 (i.e. the good ol' days — it was the best of times and it was the worst of times).

|

Tip

|

The TinkerPop graph is available with TinkerGraph via TinkerFactory.createModern(). TinkerGraph is the reference implementation of TinkerPop3 and is used in nearly all the examples in this documentation. Note that there also exists the classic TinkerFactory.createClassic() which is the graph used in TinkerPop2 and does not include vertex labels.

|

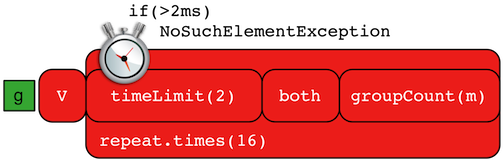

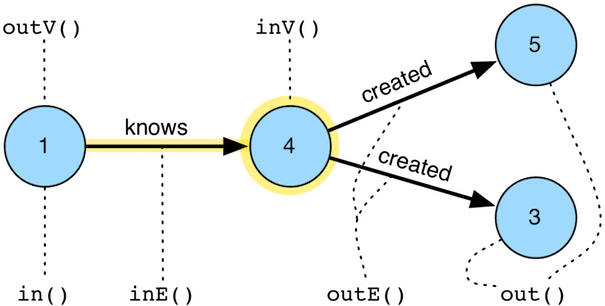

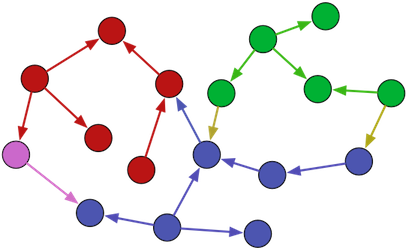
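The phrase "directed, binary, attributed multi-graph" can be made concrete with a small sketch. The code below is not the TinkerPop API; it is a hypothetical, stdlib-only Java model in which every class name, and the "uses" edge label, is an assumption made for illustration.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical stdlib-only model of a property graph; not the TinkerPop API.
final class PropertyGraphSketch {

    // Attributed: a vertex carries an id, a label, and key/value properties.
    static final class Vertex {
        final int id;
        final String label;
        final Map<String, Object> properties = new LinkedHashMap<>();

        Vertex(int id, String label) {
            this.id = id;
            this.label = label;
        }
    }

    // Directed and binary: an edge joins exactly one out-vertex to one in-vertex.
    static final class Edge {
        final String label;
        final Vertex out;
        final Vertex in;
        final Map<String, Object> properties = new LinkedHashMap<>();

        Edge(String label, Vertex out, Vertex in) {
            this.label = label;
            this.out = out;
            this.in = in;
        }
    }

    final List<Vertex> vertices = new ArrayList<>();
    // Multi-graph: a list (rather than a set keyed by endpoints) permits parallel edges.
    final List<Edge> edges = new ArrayList<>();

    Vertex addVertex(int id, String label) {
        Vertex v = new Vertex(id, label);
        vertices.add(v);
        return v;
    }

    Edge addEdge(Vertex out, String label, Vertex in) {
        Edge e = new Edge(label, out, in);
        edges.add(e);
        return e;
    }

    static PropertyGraphSketch example() {
        PropertyGraphSketch g = new PropertyGraphSketch();
        Vertex marko = g.addVertex(1, "person");
        marko.properties.put("name", "marko");
        Vertex lop = g.addVertex(3, "software");
        lop.properties.put("name", "lop");
        g.addEdge(marko, "created", lop).properties.put("weight", 0.4f);
        // A second edge between the same pair of vertices (the label is made up).
        g.addEdge(marko, "uses", lop);
        return g;
    }

    public static void main(String[] args) {
        PropertyGraphSketch g = example();
        System.out.println(g.vertices.size() + " vertices, " + g.edges.size() + " edges");
    }
}
```

Keeping edges in a plain list is the simplest way to allow two edges between the same pair of vertices. A real graph system would index them by vertex instead.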

TinkerPop3 is the third incarnation of the TinkerPop graph computing framework. Similar to computing in general, graph computing makes a distinction between structure (graph) and process (traversal). The structure of the graph is the data model defined by a vertex/edge/property topology. The process of the graph is the means by which the structure is analyzed. The typical form of graph processing is called a traversal.

-

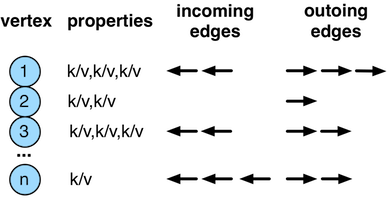

Graph: maintains a set of vertices and edges, and access to database functions such as transactions. -

Element: maintains a collection of properties and a string label denoting the element type.-

Vertex: extends Element and maintains a set of incoming and outgoing edges. -

Edge: extends Element and maintains an incoming and outgoing vertex.

-

-

Property<V>: a string key associated with aVvalue.-

VertexProperty<V>: a string key associated with aVvalue as well as a collection ofProperty<U>properties (vertices only)

-

-

TraversalSource: a generator of traversals for a particular graph, domain specific language (DSL), and execution engine.-

Traversal<S,E>: a functional data flow process transforming objects of typeSinto object of typeE.-

GraphTraversal: a traversal DSL that is oriented towards the semantics of the raw graph (i.e. vertices, edges, etc.).

-

-

-

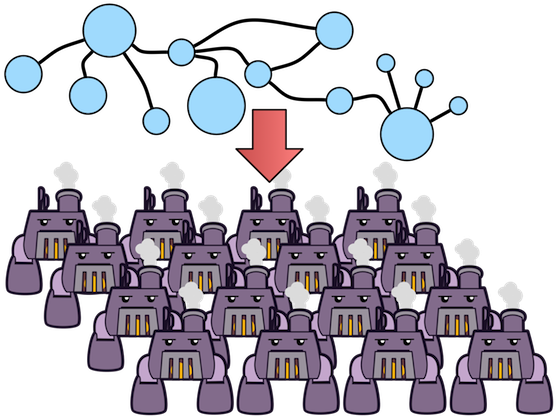

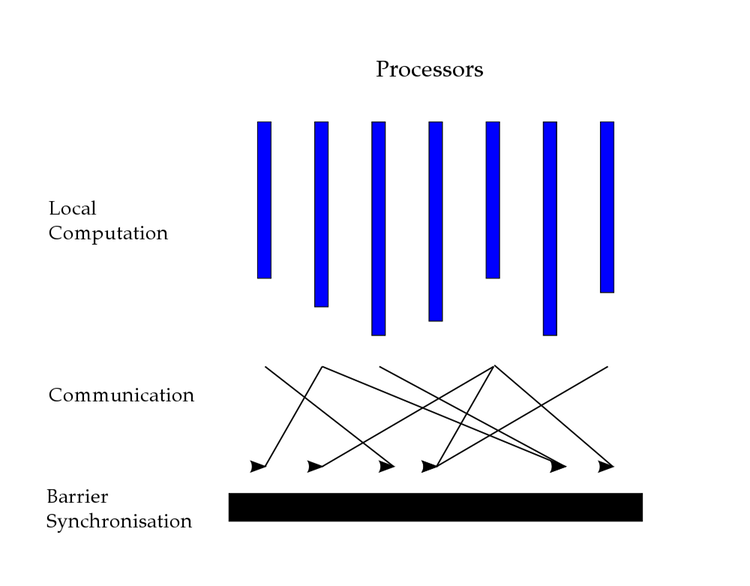

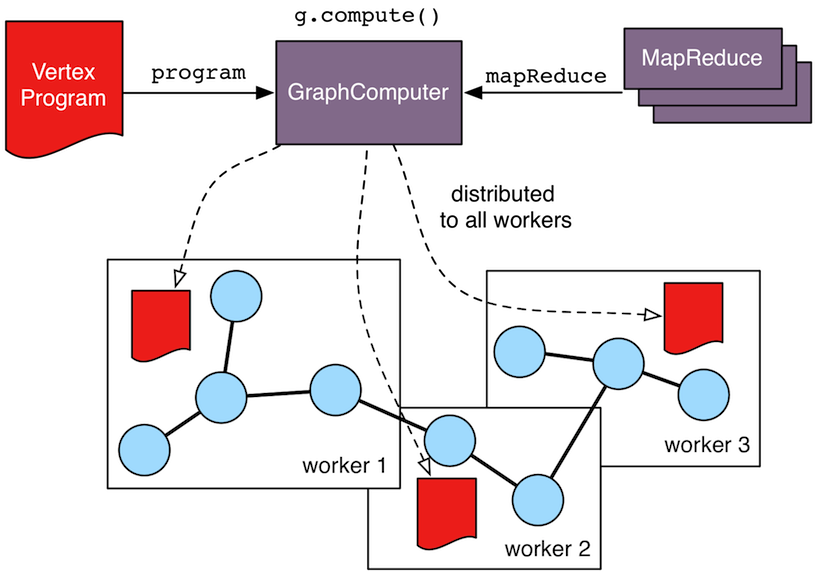

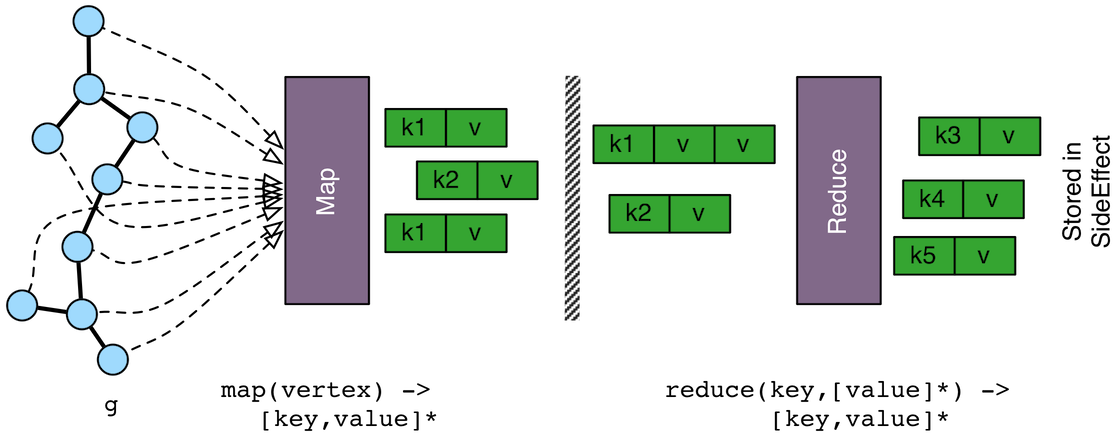

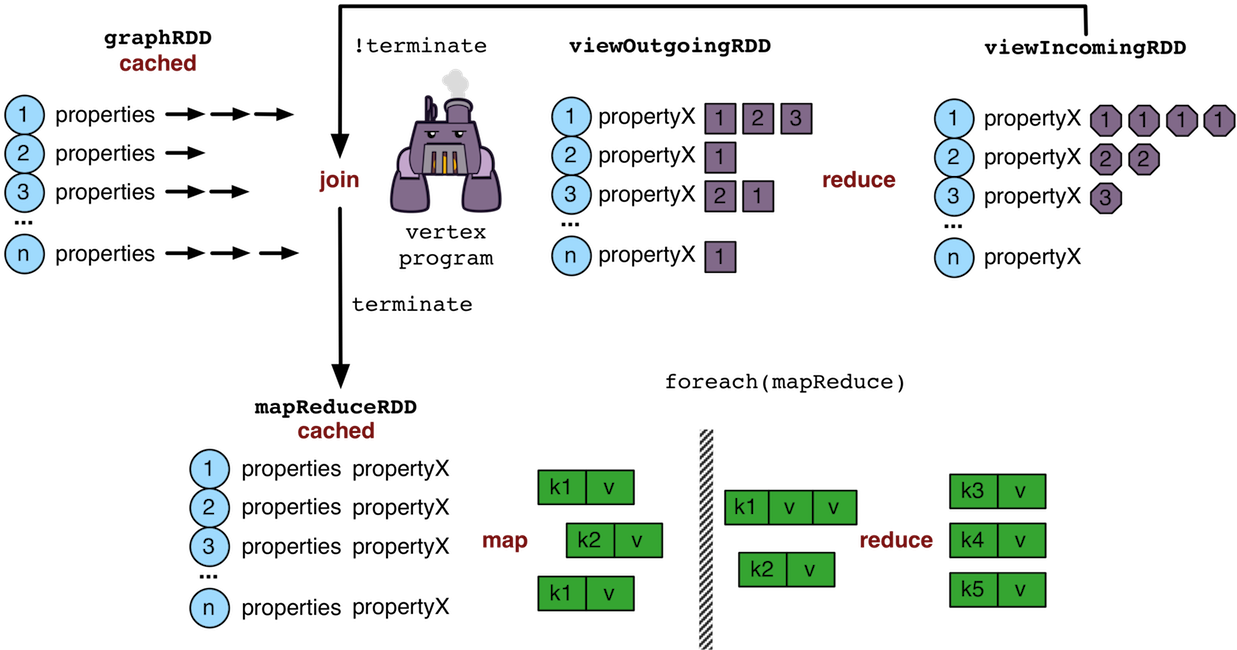

GraphComputer: a system that processes the graph in parallel and potentially, distributed over a multi-machine cluster.-

VertexProgram: code executed at all vertices in a logically parallel manner with intercommunication via message passing. -

MapReduce: a computations that analyzes all vertices in the graph in parallel and yields a single reduced result.

-

|

Important

|

TinkerPop3 is licensed under the popular Apache2 free software license. However, note that the underlying graph engine used with TinkerPop3 may have a different license. Thus, be sure to respect the license caveats of the vendor product.

|

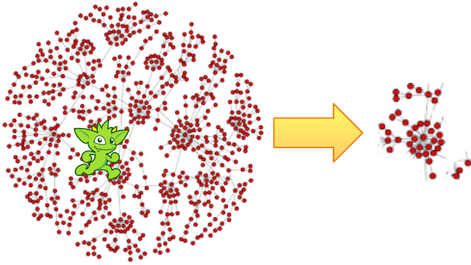

When a graph vendor implements the TinkerPop3 structure and process APIs, their technology is considered TinkerPop3-enabled and becomes nearly indistinguishable from any other TinkerPop-enabled graph system save for their respective time and space complexity. The purpose of this documentation is to describe the structure/process dichotomy at length and in doing so, explain how to leverage TinkerPop3 for the sole purpose of vendor-agnostic graph computing. Before deep-diving into the various structure/process APIs, a short introductory review of both APIs is provided.

|

Note

|

The TinkerPop3 API rides a fine line between providing concise "query language" method names and respecting Java method naming standards. The general convention used throughout TinkerPop3 is that if a method is "user exposed," then a concise name is provided (e.g. out(), path(), repeat()). If the method is primarily for vendors, then the standard Java naming convention is followed (e.g. getNextStep(), getSteps(), getElementComputeKeys()).

|

The Graph Structure

A graph’s structure is the topology formed by the explicit references between its vertices, edges, and properties. A vertex has incident edges. A vertex is adjacent to another vertex if they share an incident edge. A property is attached to an element and an element has a set of properties. A property is a key/value pair, where the key is always a character String. The graph structure API of TinkerPop3 provides the methods necessary to create such a structure. The TinkerPop graph previously diagrammed can be created with the following Java8 code. Note that this graph is available as an in-memory TinkerGraph using TinkerFactory.createClassic().

Graph graph = TinkerGraph.open(); (1)

Vertex marko = graph.addVertex(T.label, "person", T.id, 1, "name", "marko", "age", 29); (2)

Vertex vadas = graph.addVertex(T.label, "person", T.id, 2, "name", "vadas", "age", 27);

Vertex lop = graph.addVertex(T.label, "software", T.id, 3, "name", "lop", "lang", "java");

Vertex josh = graph.addVertex(T.label, "person", T.id, 4, "name", "josh", "age", 32);

Vertex ripple = graph.addVertex(T.label, "software", T.id, 5, "name", "ripple", "lang", "java");

Vertex peter = graph.addVertex(T.label, "person", T.id, 6, "name", "peter", "age", 35);

marko.addEdge("knows", vadas, T.id, 7, "weight", 0.5f); (3)

marko.addEdge("knows", josh, T.id, 8, "weight", 1.0f);

marko.addEdge("created", lop, T.id, 9, "weight", 0.4f);

josh.addEdge("created", ripple, T.id, 10, "weight", 1.0f);

josh.addEdge("created", lop, T.id, 11, "weight", 0.4f);

peter.addEdge("created", lop, T.id, 12, "weight", 0.2f);

1. Create a new in-memory TinkerGraph and assign it to the variable graph.
2. Create a vertex along with a set of key/value pairs with T.label being the vertex label and T.id being the vertex id.
3. Create an edge along with a set of key/value pairs with the edge label being specified as the first argument.

In the above code all the vertices are created first and then their respective edges. There are two "accessor tokens": T.id and T.label. When any of these, along with a set of other key value pairs is provided to Graph.addVertex(Object...) or Vertex.addEdge(String,Vertex,Object...), the respective element is created along with the provided key/value pair properties appended to it.
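To illustrate how a flat Object... argument list can mix accessor tokens with ordinary properties, here is a minimal sketch. It is not how TinkerPop implements addVertex. The Token enum, the Parsed holder, and the default label "vertex" are all assumptions made for this example.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical sketch of splitting accessor tokens from ordinary properties.
final class KeyValueSketch {

    // Stand-in for the T.id / T.label accessor tokens; not TinkerPop's T enum.
    enum Token { ID, LABEL }

    static final class Parsed {
        Object id;                 // stays null when no id was supplied
        String label = "vertex";   // default label assumed for this sketch
        final Map<String, Object> properties = new LinkedHashMap<>();
    }

    static Parsed parse(Object... keyValues) {
        if (keyValues.length % 2 != 0) {
            throw new IllegalArgumentException("key/value arguments must come in pairs");
        }
        Parsed parsed = new Parsed();
        for (int i = 0; i < keyValues.length; i += 2) {
            Object key = keyValues[i];
            Object value = keyValues[i + 1];
            if (key == Token.ID) {
                parsed.id = value;                     // token: element id
            } else if (key == Token.LABEL) {
                parsed.label = String.valueOf(value);  // token: element label
            } else if (key instanceof String) {
                parsed.properties.put((String) key, value);  // ordinary property
            } else {
                throw new IllegalArgumentException("key must be a String or a Token: " + key);
            }
        }
        return parsed;
    }

    public static void main(String[] args) {
        Parsed p = parse(Token.LABEL, "person", Token.ID, 1, "name", "marko", "age", 29);
        System.out.println(p.label + " " + p.id + " " + p.properties);
    }
}
```

The tokens may appear anywhere in the argument list, because each pair is classified by its key and not by its position.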

|

Caution

|

Many graph vendors do not allow the user to specify an element ID and in such cases, an exception is thrown. |

|

Note

|

In TinkerPop3, vertices are allowed a single immutable string label (similar to an edge label). This functionality did not exist in TinkerPop2. Likewise, element id’s are immutable as they were in TinkerPop2. |

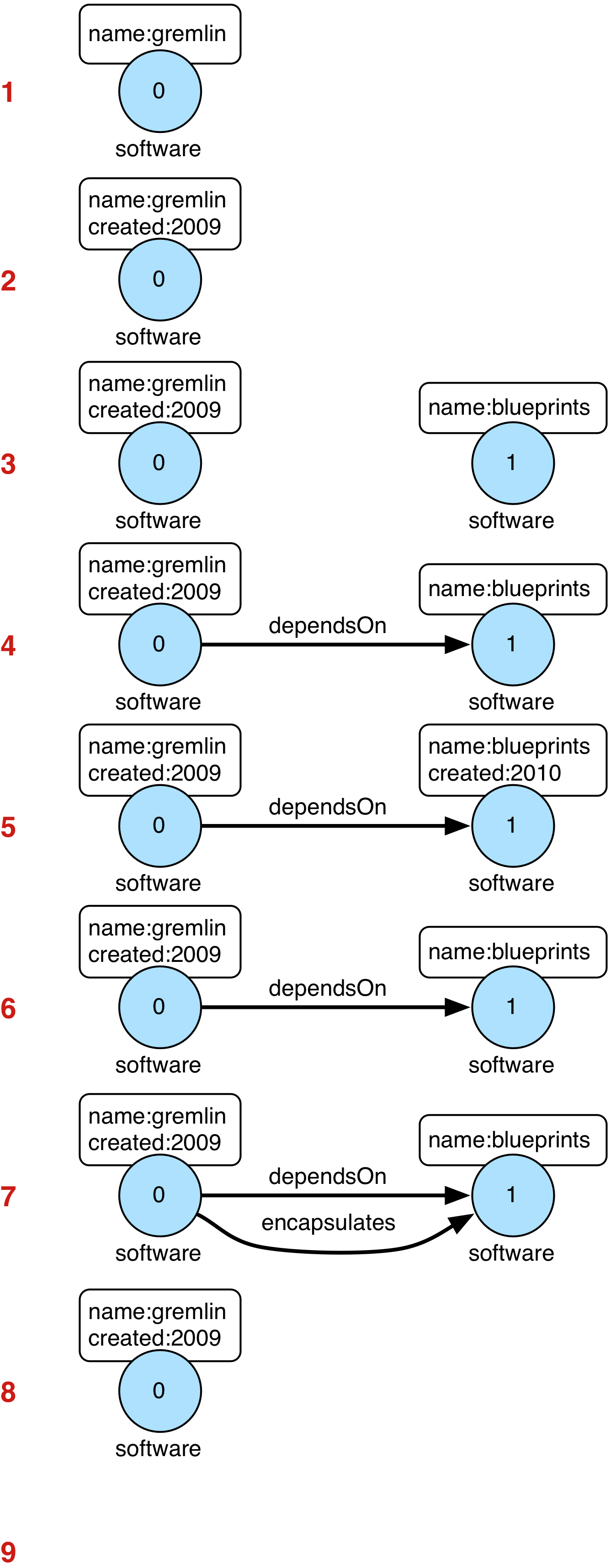

Mutating the Graph

Below is a sequence of basic graph mutation operations represented in Java8. One of the major differences between TinkerPop2 and TinkerPop3 is that in TinkerPop3, the Java convention of using setters and getters has been abandoned in favor of a syntax that is more aligned with the syntax of Gremlin-Groovy in TinkerPop2. Given that Gremlin-Java8 and Gremlin-Groovy are nearly identical due to the inclusion of Java8 lambdas, a big effort was made to ensure that both languages are as similar as possible.

|

Caution

|

In the code examples presented throughout this documentation, either Gremlin-Java8 or Gremlin-Groovy is used. It is possible to determine which derivative of Gremlin is being used by "mousing over" the code block and seeing either "JAVA" or "GROOVY" pop up in the top right corner of the code block.

|

Graph graph = TinkerGraph.open();

// add a software vertex with a name property

Vertex gremlin = graph.addVertex(T.label, "software", "name", "gremlin"); (1)

// only one vertex should exist

assert(IteratorUtils.count(graph.vertices()) == 1);

// no edges should exist as none have been created

assert(IteratorUtils.count(graph.edges()) == 0);

// add a new property

gremlin.property("created", 2009); (2)

// add a new software vertex to the graph

Vertex blueprints = graph.addVertex(T.label, "software", "name", "blueprints"); (3)

// connect gremlin to blueprints via a dependsOn-edge

gremlin.addEdge("dependsOn", blueprints); (4)

// now there are two vertices and one edge

assert(IteratorUtils.count(graph.vertices()) == 2);

assert(IteratorUtils.count(graph.edges()) == 1);

// add a property to blueprints

blueprints.property("created", 2010); (5)

// remove that property

blueprints.property("created").remove(); (6)

// connect gremlin to blueprints via encapsulates

gremlin.addEdge("encapsulates", blueprints); (7)

assert(IteratorUtils.count(graph.vertices()) == 2);

assert(IteratorUtils.count(graph.edges()) == 2);

// removing a vertex removes all its incident edges as well

blueprints.remove(); (8)

gremlin.remove(); (9)

// the graph is now empty

assert(IteratorUtils.count(graph.vertices()) == 0);

assert(IteratorUtils.count(graph.edges()) == 0);

// tada!

|

Important

|

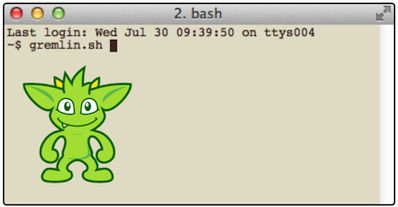

Gremlin-Groovy leverages the Groovy 2.x language to express Gremlin traversals. One of the major benefits of Groovy is the inclusion of a runtime console that makes it easy for developers to practice with the Gremlin language and for production users to connect to their graph and execute traversals in an interactive manner. Moreover, Gremlin-Groovy provides various syntax simplifications.

|

|

Tip

|

For those wishing to use the Gremlin2 syntax, please see SugarPlugin. This plugin provides syntactic sugar at, typically, a runtime cost. It can be loaded programmatically via SugarLoader.load(). Once loaded, it is possible to do g.V.out.name instead of g.V().out().values('name') as well as a host of other conveniences.

|

Here is the same code, but using Gremlin-Groovy in the Gremlin Console.

$ bin/gremlin.sh

\,,,/

(o o)

-----oOOo-(3)-oOOo-----

gremlin> graph = TinkerGraph.open()

==>tinkergraph[vertices:0 edges:0]

gremlin> gremlin = graph.addVertex(label,'software','name','gremlin')

==>v[0]

gremlin> gremlin.property('created',2009)

==>vp[created->2009]

gremlin> blueprints = graph.addVertex(label,'software','name','blueprints')

==>v[3]

gremlin> gremlin.addEdge('dependsOn',blueprints)

==>e[5][0-dependsOn->3]

gremlin> blueprints.property('created',2010)

==>vp[created->2010]

gremlin> blueprints.property('created').remove()

==>null

gremlin> gremlin.addEdge('encapsulates',blueprints)

==>e[7][0-encapsulates->3]

gremlin> blueprints.remove()

==>null

gremlin> gremlin.remove()

==>null

|

Important

|

TinkerGraph is not a transactional graph. For more information on transaction handling (for those graph systems that support them) see the section dedicated to transactions. |

The Graph Process

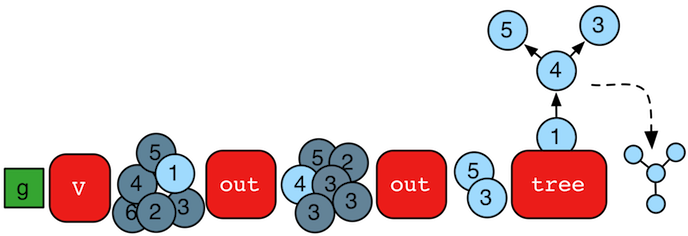

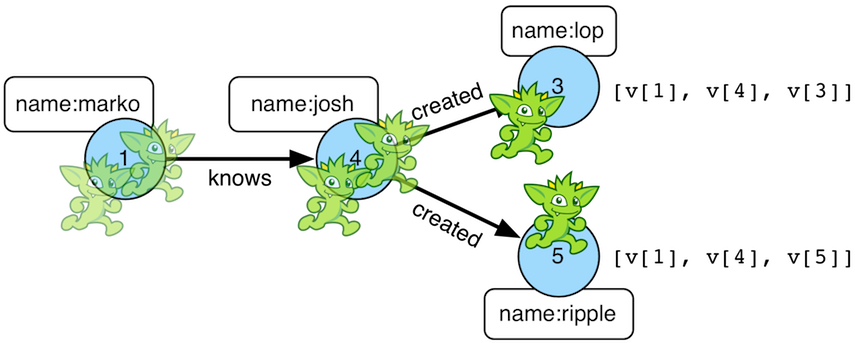

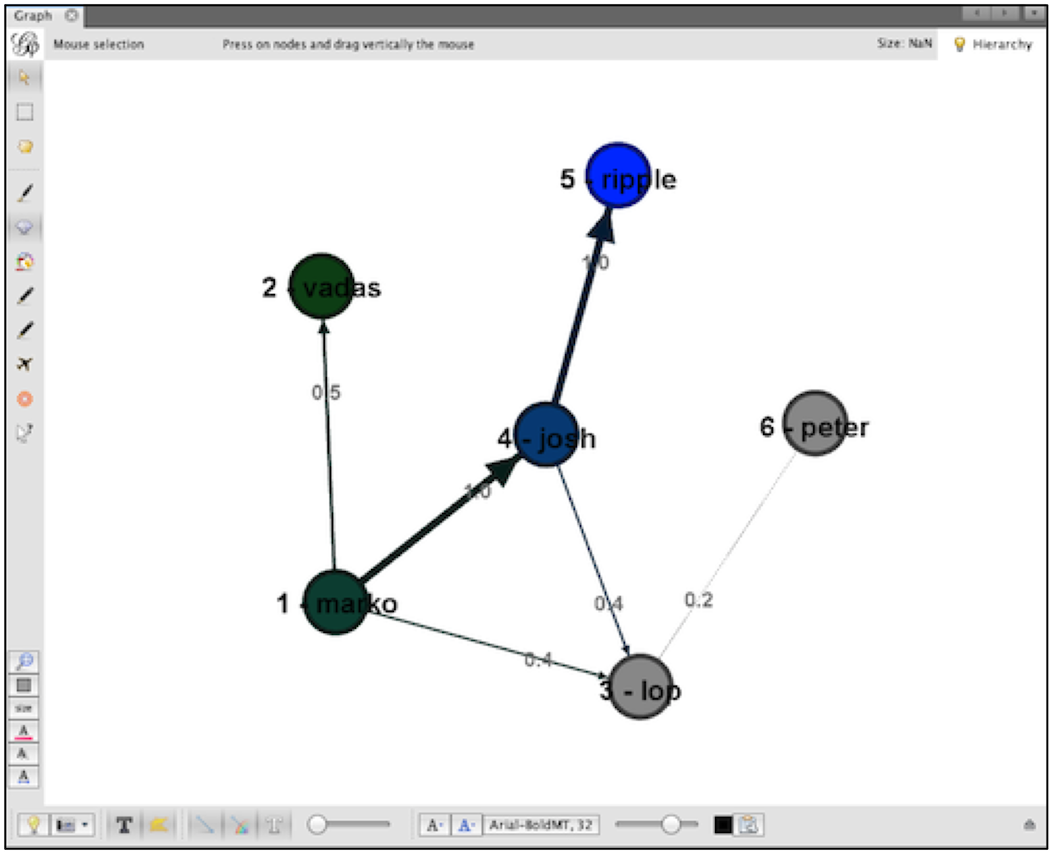

The primary way in which graphs are processed is via graph traversals. The TinkerPop3 process API is focused on allowing users to create graph traversals in a syntactically-friendly way over the structures defined in the previous section. A traversal is an algorithmic walk across the elements of a graph according to the referential structure explicit within the graph data structure. For example: "What software do vertex 1’s friends work on?" This English statement can be represented in the following algorithmic/traversal fashion:

1. Start at vertex 1.
2. Walk the incident knows-edges to the respective adjacent friend vertices of 1.
3. Move from those friend-vertices to software-vertices via created-edges.
4. Finally, select the name-property value of the current software-vertices.
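The four steps above can be sketched in plain Java without any graph library. This is only an illustration of the walk, not Gremlin itself. The class and method names are hypothetical, and the vertex names and edges are copied from the graph-construction code earlier in this section.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical hand-rolled walk; a Gremlin traversal expresses the same idea declaratively.
final class FriendSoftwareWalk {

    static final class Edge {
        final int out;
        final String label;
        final int in;

        Edge(int out, String label, int in) {
            this.out = out;
            this.label = label;
            this.in = in;
        }
    }

    static final Map<Integer, String> NAMES = new HashMap<>();
    static final List<Edge> EDGES = Arrays.asList(
            new Edge(1, "knows", 2),
            new Edge(1, "knows", 4),
            new Edge(1, "created", 3),
            new Edge(4, "created", 5),
            new Edge(4, "created", 3),
            new Edge(6, "created", 3));

    static {
        NAMES.put(1, "marko");
        NAMES.put(2, "vadas");
        NAMES.put(3, "lop");
        NAMES.put(4, "josh");
        NAMES.put(5, "ripple");
        NAMES.put(6, "peter");
    }

    // Follow outgoing edges with the given label from every start vertex.
    static List<Integer> out(List<Integer> starts, String label) {
        List<Integer> result = new ArrayList<>();
        for (int start : starts) {
            for (Edge e : EDGES) {
                if (e.out == start && e.label.equals(label)) {
                    result.add(e.in);
                }
            }
        }
        return result;
    }

    static List<String> softwareOfFriends(int startId) {
        List<Integer> start = Collections.singletonList(startId); // start at the vertex
        List<Integer> friends = out(start, "knows");              // walk knows-edges
        List<Integer> software = out(friends, "created");         // move along created-edges
        List<String> names = new ArrayList<>();
        for (int id : software) {
            names.add(NAMES.get(id));                             // select the name value
        }
        return names;
    }

    public static void main(String[] args) {
        System.out.println(softwareOfFriends(1));
    }
}
```

Each call to out() scans every edge, which is fine for six edges. A graph database avoids that scan by keeping edges adjacent to their vertices.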

Traversals in Gremlin are spawned from a TraversalSource. The GraphTraversalSource is the typical "graph-oriented" DSL used throughout the documentation and will most likely be the most used DSL in a TinkerPop application. GraphTraversalSource provides two traversal methods.

- GraphTraversalSource.V(Object... ids): generates a traversal starting at vertices in the graph (if no ids are provided, all vertices).
- GraphTraversalSource.E(Object... ids): generates a traversal starting at edges in the graph (if no ids are provided, all edges).

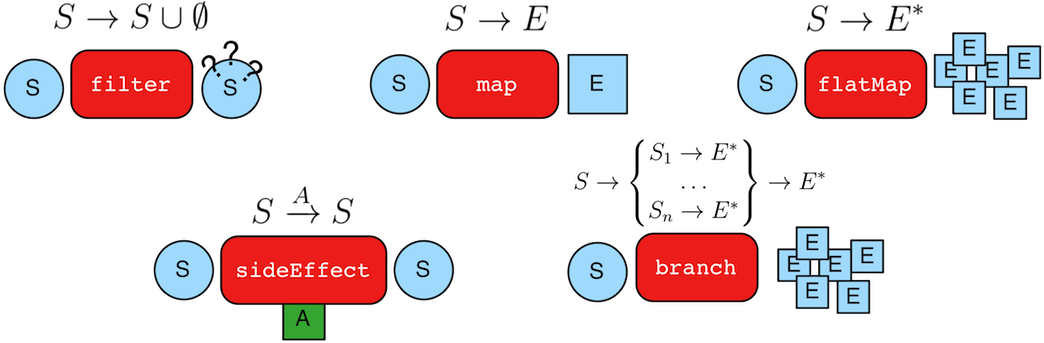

The return type of V() and E() is a GraphTraversal. A GraphTraversal maintains numerous methods that return GraphTraversal. In this way, a GraphTraversal supports function composition. Each method of GraphTraversal is called a step and each step modulates the results of the previous step in one of five general ways.

-

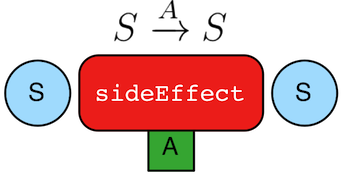

map: transform the incoming traverser’s object to another object (S → E). -

flatMap: transform the incoming traverser’s object to an iterator of other objects (S → E*). -

filter: allow or disallow the traverser from proceeding to the next step (S → S ∪ ∅). -

sideEffect: allow the traverser to proceed unchanged, but yield some computational sideEffect in the process (S ↬ S). -

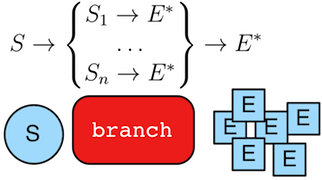

branch: split the traverser and send each to an arbitrary location in the traversal (S → { S1 → E*, …, Sn → E* } → E*).

Nearly every step in GraphTraversal either extends MapStep, FlatMapStep, FilterStep, SideEffectStep, or BranchStep.
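For readers who know java.util.stream, four of the five step kinds have a rough analogue there. The sketch below uses only the Java standard library and is an analogy, not TinkerPop code. The class name is hypothetical, and branch is left out because streams have no direct counterpart.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

// Analogy only: Java streams standing in for the general step kinds.
final class StepKindsSketch {

    // map: one object in, one object out (S -> E).
    static List<Integer> mapStep(List<String> in) {
        return in.stream().map(String::length).collect(Collectors.toList());
    }

    // flatMap: one object in, zero or more objects out (S -> E*).
    static List<String> flatMapStep(List<String> in) {
        return in.stream()
                .flatMap(s -> s.chars().mapToObj(c -> String.valueOf((char) c)))
                .collect(Collectors.toList());
    }

    // filter: the object either continues unchanged or is dropped.
    static List<String> filterStep(List<String> in) {
        return in.stream().filter(s -> s.length() > 3).collect(Collectors.toList());
    }

    // sideEffect: the object continues unchanged while 'seen' is updated on the side.
    static List<String> sideEffectStep(List<String> in, List<String> seen) {
        return in.stream().peek(seen::add).collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> names = Arrays.asList("lop", "ripple");
        List<String> seen = new ArrayList<>();
        System.out.println(mapStep(names));
        System.out.println(flatMapStep(names));
        System.out.println(filterStep(names));
        System.out.println(sideEffectStep(names, seen) + " seen=" + seen);
    }
}
```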

|

Tip

|

GraphTraversal is a monoid in that it is an algebraic structure that has a single binary operation that is associative. The binary operation is function composition (i.e. method chaining) and its identity is the step identity(). This is related to a monad as popularized by the functional programming community.

|
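The two properties named in the tip, associativity and identity, can be checked with ordinary java.util.function.Function composition. This is a sketch of the algebraic idea only. The three functions and the class name are arbitrary choices for illustration and have nothing to do with Gremlin steps.

```java
import java.util.function.Function;

// Illustration of composition being associative with an identity element.
final class CompositionSketch {

    static final Function<Integer, Integer> INC = x -> x + 1;
    static final Function<Integer, Integer> DOUBLE = x -> x * 2;
    static final Function<Integer, Integer> SQUARE = x -> x * x;

    // (INC then DOUBLE) then SQUARE
    static int leftGrouped(int x) {
        return INC.andThen(DOUBLE).andThen(SQUARE).apply(x);
    }

    // INC then (DOUBLE then SQUARE)
    static int rightGrouped(int x) {
        return INC.andThen(DOUBLE.andThen(SQUARE)).apply(x);
    }

    // Composing with the identity function on either side changes nothing.
    static int withIdentity(int x) {
        Function<Integer, Integer> id = Function.identity();
        return id.andThen(INC).andThen(id).apply(x);
    }

    public static void main(String[] args) {
        System.out.println(leftGrouped(3) + " " + rightGrouped(3) + " " + withIdentity(3));
    }
}
```

Both groupings give the same result for every input, which is what lets a chain of steps be regrouped without changing its meaning.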

Given the TinkerPop graph, the following query will return the names of all the people that the marko-vertex knows. The following query is demonstrated using Gremlin-Groovy.

$ bin/gremlin.sh

\,,,/

(o o)

-----oOOo-(3)-oOOo-----

gremlin> graph = TinkerFactory.createModern() (1)

==>tinkergraph[vertices:6 edges:6]

gremlin> g = graph.traversal(standard()) (2)

==>graphtraversalsource[tinkergraph[vertices:6 edges:6], standard]

gremlin> g.V().has('name','marko').out('knows').values('name') (3)

==>vadas

==>josh

1. Open the toy graph and reference it by the variable graph.
2. Create a graph traversal source from the graph using the standard, OLTP traversal engine.
3. Spawn a traversal off the traversal source that determines the names of the people that the marko-vertex knows.

Or, if the marko-vertex is already realized with a direct reference pointer (i.e. a variable), then the traversal can be spawned off that vertex.

gremlin> marko = g.V().has('name','marko').next() //(1)

==>v[1]

gremlin> g.V(marko).out('knows') //(2)

==>v[2]

==>v[4]

gremlin> g.V(marko).out('knows').values('name') //(3)

==>vadas

==>josh

1. Set the variable marko to the vertex in the graph g named "marko".
2. Get the vertices that are outgoing adjacent to the marko-vertex via knows-edges.
3. Get the names of the marko-vertex’s friends.

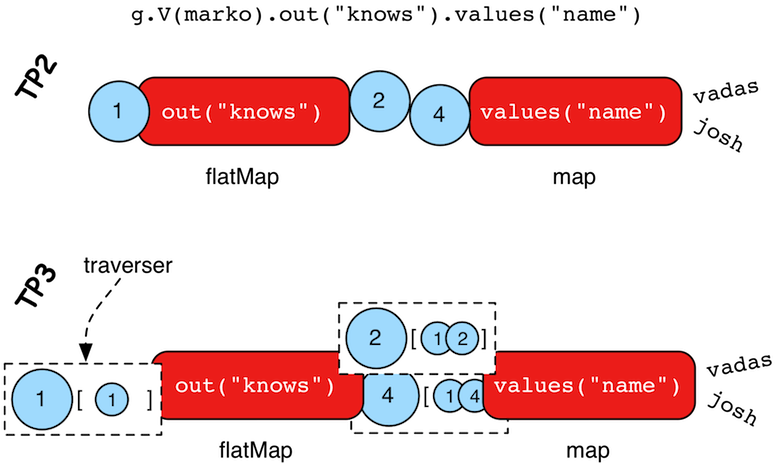

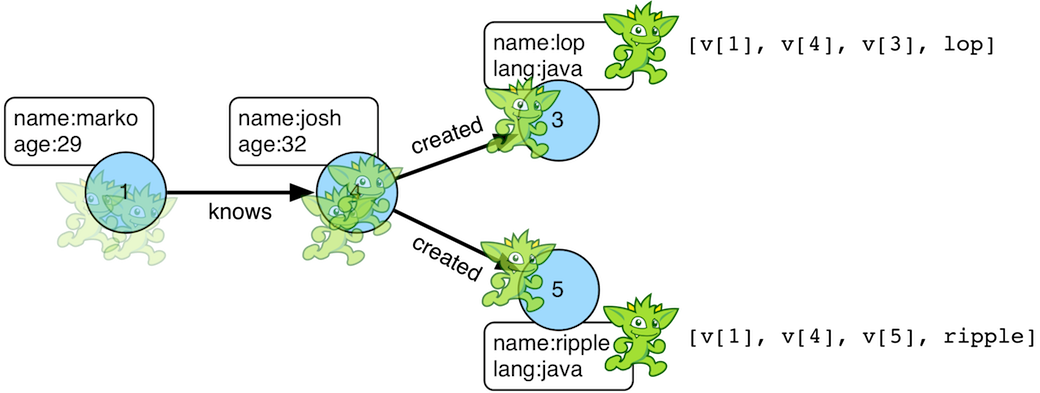

The Traverser

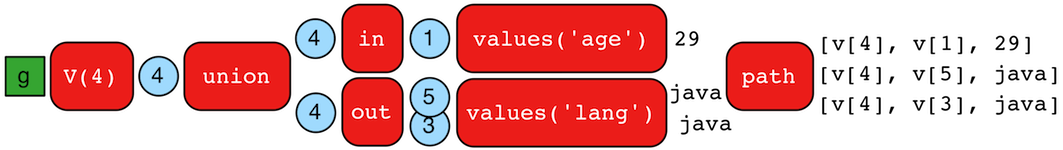

When a traversal is executed, the source of the traversal is on the left of the expression (e.g. vertex 1), the steps are the middle of the traversal (e.g. out('knows') and values('name')), and the results are "traversal.next()'d" out of the right of the traversal (e.g. "vadas" and "josh").

In TinkerPop3, the objects propagating through the traversal are wrapped in a Traverser<T>. The traverser concept is new to TinkerPop3 and provides the means by which steps remain stateless. A traverser maintains all the metadata about the traversal — e.g., how many times the traverser has gone through a loop, the path history of the traverser, the current object being traversed, etc. Traverser metadata may be accessed by a step. A classic example is the path()-step.

gremlin> g.V(marko).out('knows').values('name').path()

==>[v[1], v[2], vadas]

==>[v[1], v[4], josh]

|

Caution

|

Path calculation is costly in terms of space as an array of previously seen objects is stored in each path of the respective traverser. Thus, a traversal strategy analyzes the traversal to determine if path metadata is required. If not, then path calculations are turned off. |

Another example is the repeat()-step which takes into account the number of times the traverser has gone through a particular section of the traversal expression (i.e. a loop).

gremlin> g.V(marko).repeat(out()).times(2).values('name')

==>ripple

==>lop

|

Caution

|

A traversal’s results are never ordered unless explicitly ordered by means of the order()-step. Thus, never rely on the iteration order between TinkerPop3 releases and even within a release (as traversal optimizations may alter the flow).

|
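The idea that a traverser, and not the step, carries the path history and loop count can be sketched as a small immutable wrapper. The class below is a hypothetical model and is not TinkerPop's Traverser interface. The method names start, move, and incrLoops are assumptions, and plain strings stand in for vertices.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical model of a traverser; not TinkerPop's Traverser interface.
final class TraverserSketch {

    static final class Traverser<T> {
        final T current;          // the object currently being traversed
        final List<Object> path;  // every object visited so far, in order
        final int loops;          // how many times a loop section has been passed

        private Traverser(T current, List<Object> path, int loops) {
            this.current = current;
            this.path = Collections.unmodifiableList(path);
            this.loops = loops;
        }

        static <T> Traverser<T> start(T object) {
            List<Object> path = new ArrayList<>();
            path.add(object);
            return new Traverser<>(object, path, 0);
        }

        // Moving to a new object yields a new traverser with an extended path.
        <E> Traverser<E> move(E next) {
            List<Object> extended = new ArrayList<>(path);
            extended.add(next);
            return new Traverser<>(next, extended, loops);
        }

        // Passing through a loop section yields a new traverser with a higher count.
        Traverser<T> incrLoops() {
            return new Traverser<>(current, new ArrayList<>(path), loops + 1);
        }
    }

    static List<Object> demoPath() {
        return Traverser.start("v[1]").move("v[4]").move("josh").path;
    }

    public static void main(String[] args) {
        System.out.println(demoPath());
    }
}
```

Because all of the state travels with the traverser, a step in this model needs no fields of its own, which is the sense in which steps remain stateless. Copying the path on every move also shows why path tracking costs space.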

On Gremlin Language Variants

Gremlin is written in Java8. There are various language variants of Gremlin such as Gremlin-Groovy (packaged with TinkerPop3), Gremlin-Scala, Gremlin-JavaScript, Gremlin-Clojure (known as Ogre), etc. It is best to think of Gremlin as a style of graph traversing that is not bound to a particular programming language per se. Within a programming language familiar to the developer, there is a Gremlin variant that they can use that leverages the idioms of that language. At minimum, a programming language providing a Gremlin implementation must support function chaining (with lambdas/anonymous functions being a "nice to have" if the variant wishes to offer arbitrary computations beyond the provided Gremlin steps).

Throughout the documentation, the examples provided are primarily written in Gremlin-Groovy. The reason for this is the Gremlin Console whereby an interactive programming environment exists that does not require code compilation. For learning TinkerPop3 and interacting with a live graph system in an ad hoc manner, the Gremlin Console is invaluable. However, for developers interested in working with Gremlin-Java, a few Groovy-to-Java patterns are presented below.

// Gremlin-Groovy

g.V().out('knows').values('name') (1)

g.V().out('knows').map{it.get().value('name') + ' is the friend name'} (2)

g.V().out('knows').sideEffect(System.out.&println) (3)

g.V().as('person').out('knows').as('friend').select().by{it.value('name').length()} (4)

// Gremlin-Java8

g.V().out("knows").values("name") (1)

g.V().out("knows").map(t -> t.get().value("name") + " is the friend name") (2)

g.V().out("knows").sideEffect(System.out::println) (3)

g.V().as("person").out("knows").as("friend").select().by((Function<Vertex, Integer>) v -> v.<String>value("name").length()) (4)

1. All the non-lambda step chaining is identical in Gremlin-Groovy and Gremlin-Java. However, note that Groovy supports 'strings as well as "strings.
2. In Groovy, lambdas are called closures and have a different syntax: Groovy supports the it keyword, whereas Java requires every parameter to be named.
3. The syntax for method references differs slightly between Java and Gremlin-Groovy.
4. Groovy is lenient on object typing and Java is not. When the parameter type of the lambda is not known, typecasting is required.

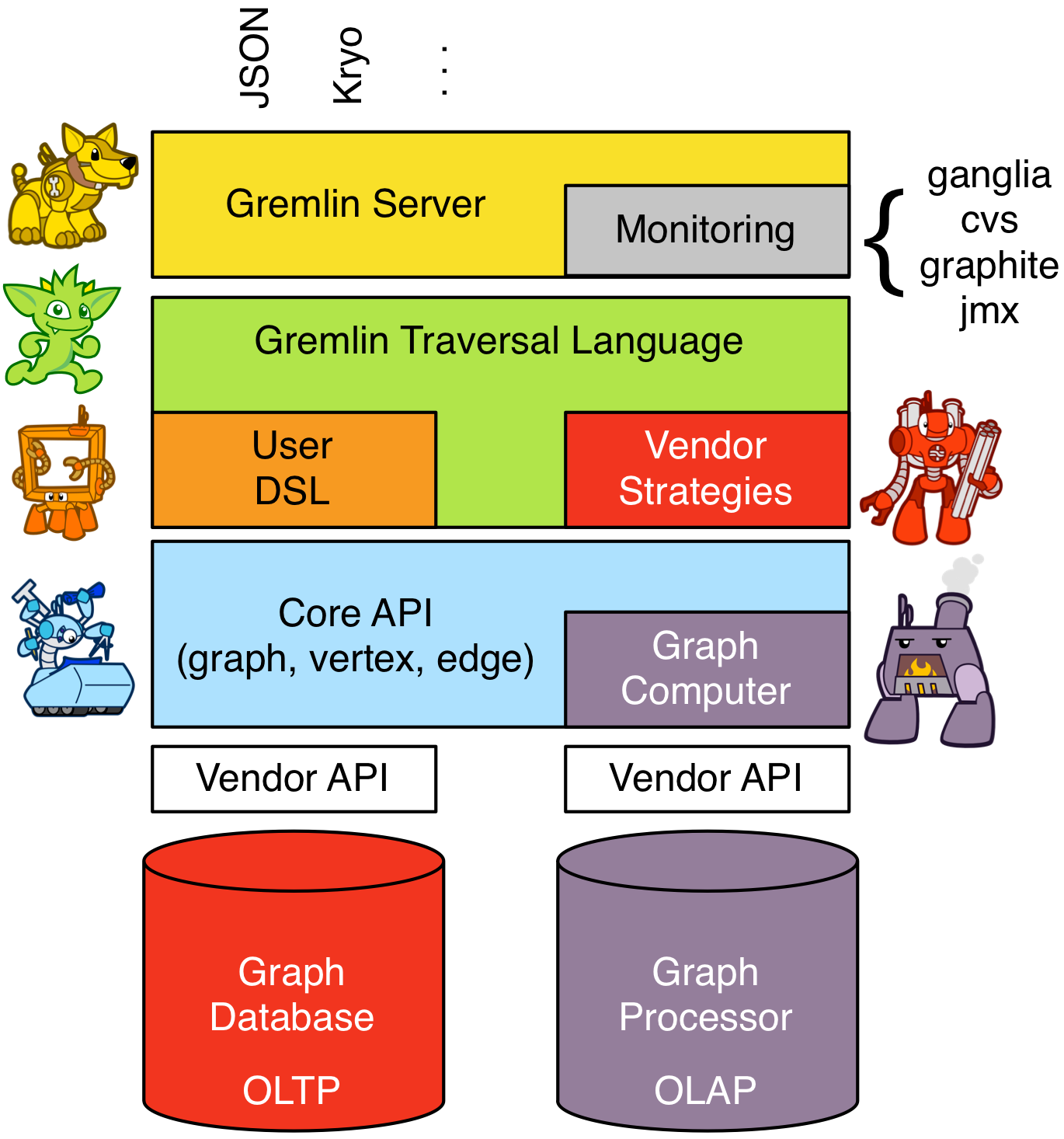

Vendor Integration

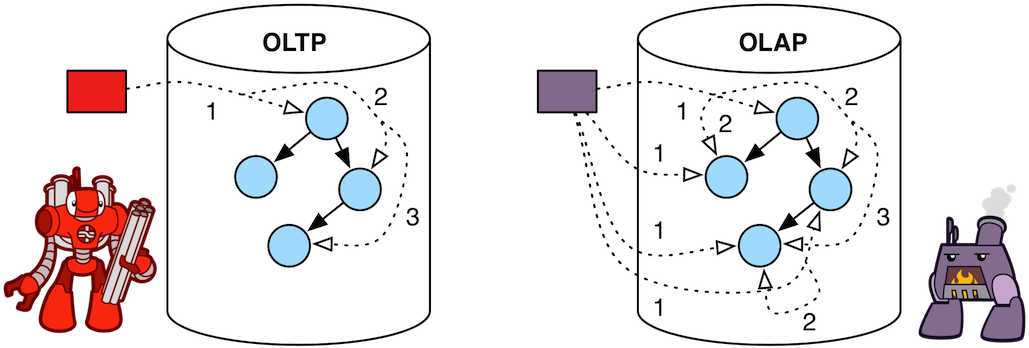

TinkerPop is a framework composed of various interoperable components. At the foundation there is the core TinkerPop3 API which defines what a Graph, Vertex, Edge, etc. are. At minimum a vendor must implement the core API. Once implemented, the Gremlin traversal language is available to the vendor’s users. However, the vendor can go further and provide specific TraversalStrategy optimizations that allow the vendor to inspect a Gremlin query at runtime and optimize it for their particular implementation (e.g. index lookups, step reordering). If the vendor’s graph system is a graph processor (i.e. provides OLAP capabilities), the vendor can implement the GraphComputer API. This API defines how messages/traversers are passed between communicating workers (i.e. threads and/or machines). Once implemented, the same Gremlin traversals execute against both the graph database (OLTP) and the graph processor (OLAP). Note that the Gremlin language interprets the graph in terms of vertices and edges — i.e. Gremlin is a graph-based domain specific language. Users can create their own domain specific languages to process the graph in terms of higher-order constructs such as people, companies, and their various relationships. Finally, Gremlin Server can be leveraged to allow over the wire communication with the TinkerPop-enabled graph system. Gremlin Server provides a configurable communication interface along with metrics and monitoring capabilities. In total, this is The TinkerPop.

The Graph

Features

A Feature implementation describes the capabilities of a Graph instance. This interface is implemented by vendors for two purposes:

- It tells users the capabilities of their Graph instance.
- It allows the features they do comply with to be tested against the Gremlin Test Suite (tests that do not comply are "ignored").

The following example in the Gremlin Console shows how to print all the features of a Graph:

gremlin> graph = TinkerGraph.open()

==>tinkergraph[vertices:0 edges:0]

gremlin> graph.features()

==>FEATURES

> GraphFeatures

>-- Persistence: false

>-- ThreadedTransactions: false

>-- Transactions: false

>-- Computer: true

> VariableFeatures

>-- Variables: true

>-- BooleanValues: true

>-- ByteValues: true

>-- DoubleValues: true

>-- FloatValues: true

>-- IntegerValues: true

>-- LongValues: true

>-- MapValues: true

>-- MixedListValues: true

>-- SerializableValues: true

>-- StringValues: true

>-- UniformListValues: true

>-- BooleanArrayValues: true

>-- ByteArrayValues: true

>-- DoubleArrayValues: true

>-- FloatArrayValues: true

>-- IntegerArrayValues: true

>-- LongArrayValues: true

>-- StringArrayValues: true

> VertexFeatures

>-- AddVertices: true

>-- RemoveVertices: true

>-- MetaProperties: true

>-- MultiProperties: true

>-- AddProperty: true

>-- RemoveProperty: true

>-- NumericIds: true

>-- StringIds: true

>-- UuidIds: true

>-- CustomIds: false

>-- AnyIds: true

>-- UserSuppliedIds: true

> VertexPropertyFeatures

>-- AddProperty: true

>-- RemoveProperty: true

>-- NumericIds: true

>-- StringIds: true

>-- UuidIds: true

>-- CustomIds: false

>-- AnyIds: true

>-- UserSuppliedIds: true

>-- Properties: true

>-- BooleanValues: true

>-- ByteValues: true

>-- DoubleValues: true

>-- FloatValues: true

>-- IntegerValues: true

>-- LongValues: true

>-- MapValues: true

>-- MixedListValues: true

>-- SerializableValues: true

>-- StringValues: true

>-- UniformListValues: true

>-- BooleanArrayValues: true

>-- ByteArrayValues: true

>-- DoubleArrayValues: true

>-- FloatArrayValues: true

>-- IntegerArrayValues: true

>-- LongArrayValues: true

>-- StringArrayValues: true

> EdgeFeatures

>-- AddEdges: true

>-- RemoveEdges: true

>-- AddProperty: true

>-- RemoveProperty: true

>-- NumericIds: true

>-- StringIds: true

>-- UuidIds: true

>-- CustomIds: false

>-- AnyIds: true

>-- UserSuppliedIds: true

> EdgePropertyFeatures

>-- Properties: true

>-- BooleanValues: true

>-- ByteValues: true

>-- DoubleValues: true

>-- FloatValues: true

>-- IntegerValues: true

>-- LongValues: true

>-- MapValues: true

>-- MixedListValues: true

>-- SerializableValues: true

>-- StringValues: true

>-- UniformListValues: true

>-- BooleanArrayValues: true

>-- ByteArrayValues: true

>-- DoubleArrayValues: true

>-- FloatArrayValues: true

>-- IntegerArrayValues: true

>-- LongArrayValues: true

>-- StringArrayValues: true

A common pattern for using features is to check their support prior to performing an operation:

gremlin> graph.features().graph().supportsTransactions()

==>false

gremlin> graph.features().graph().supportsTransactions() ? g.tx().commit() : "no tx"

==>no tx

|

Tip

|

To ensure vendor agnostic code, always check feature support prior to usage of a particular function. In that way, the application can behave gracefully in case a particular implementation is provided at runtime that does not support a function being accessed. |

|

Warning

|

Assignments of a GraphStrategy can alter the base features of a Graph in dynamic ways, such that checks against a Feature may not always reflect the behavior exhibited when the GraphStrategy is in use.

|
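The check-before-use pattern recommended above can be sketched independently of any graph system. In the sketch below, the GraphFeatures interface is a hypothetical one-method stand-in that borrows only the name supportsTransactions() from the console example. The helper method and its return strings are assumptions.

```java
// Hypothetical sketch of checking a capability before using it.
final class FeatureCheckSketch {

    // Stand-in for a graph-level feature description; not the TinkerPop interface.
    interface GraphFeatures {
        boolean supportsTransactions();
    }

    // Runs the commit action only when transactions are supported.
    static String commitIfSupported(GraphFeatures features, Runnable commit) {
        if (features.supportsTransactions()) {
            commit.run();
            return "committed";
        }
        return "no tx";
    }

    public static void main(String[] args) {
        // A graph without transaction support: the commit action is never invoked.
        String result = commitIfSupported(() -> false, () -> {
            throw new IllegalStateException("commit must not run");
        });
        System.out.println(result);
    }
}
```

Wrapping the check in one helper keeps the application code identical regardless of which implementation is supplied at runtime.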

Vertex Properties

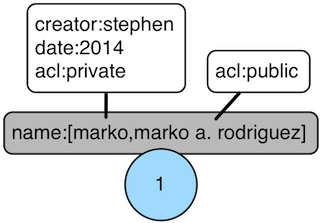

TinkerPop3 introduces the concept of a VertexProperty<V>. All the properties of a Vertex are a VertexProperty. A VertexProperty implements Property and as such, it has a key/value pair. However, VertexProperty also implements Element and thus, can have a collection of key/value pairs. Moreover, while an Edge can only have one property of key "name" (for example), a Vertex can have multiple "name" properties. With the inclusion of vertex properties, two features are introduced which ultimately advance the graph modeler’s toolkit:

- Multiple properties (multi-properties): a vertex property key can have multiple values (i.e. a vertex can have multiple "name" properties).
- Properties on properties (meta-properties): a vertex property can have properties (i.e. a vertex property can have key/value data associated with it).

A collection of use cases are itemized below:

-

Permissions: Vertex properties can have key/value ACL-type permission information associated with them.

-

Auditing: When a vertex property is manipulated, it can have key/value information attached to it saying who the creator, deleter, etc. are.

-

Provenance: The "name" of a vertex can be declared by multiple users.

A running example using vertex properties is provided below to demonstrate and explain the API.

gremlin> graph = TinkerGraph.open()

==>tinkergraph[vertices:0 edges:0]

gremlin> g = graph.traversal(standard())

==>graphtraversalsource[tinkergraph[vertices:0 edges:0], standard]

gremlin> v = g.addV('name','marko','name','marko a. rodriguez').next()

==>v[0]

gremlin> g.V(v).properties().count()

==>2

gremlin> g.V(v).properties('name').count() //(1)

==>2

gremlin> g.V(v).properties()

==>vp[name->marko]

==>vp[name->marko a. rodriguez]

gremlin> g.V(v).properties('name')

==>vp[name->marko]

==>vp[name->marko a. rodriguez]

gremlin> g.V(v).properties('name').hasValue('marko')

==>vp[name->marko]

gremlin> g.V(v).properties('name').hasValue('marko').property('acl','private') //(2)

==>vp[name->marko]

gremlin> g.V(v).properties('name').hasValue('marko a. rodriguez')

==>vp[name->marko a. rodriguez]

gremlin> g.V(v).properties('name').hasValue('marko a. rodriguez').property('acl','public')

==>vp[name->marko a. rodriguez]

gremlin> g.V(v).properties('name').has('acl','public').value()

==>marko a. rodriguez

gremlin> g.V(v).properties('name').has('acl','public').drop() //(3)

gremlin> g.V(v).properties('name').has('acl','public').value()

gremlin> g.V(v).properties('name').has('acl','private').value()

==>marko

gremlin> g.V(v).properties()

==>vp[name->marko]

gremlin> g.V(v).properties().properties() //(4)

==>p[acl->private]

gremlin> g.V(v).properties().property('date',2014) //(5)

==>vp[name->marko]

gremlin> g.V(v).properties().property('creator','stephen')

==>vp[name->marko]

gremlin> g.V(v).properties().properties()

==>p[date->2014]

==>p[creator->stephen]

==>p[acl->private]

gremlin> g.V(v).properties('name').valueMap()

==>[date:2014, creator:stephen, acl:private]

gremlin> g.V(v).property('name','okram') //(6)

==>v[0]

gremlin> g.V(v).properties('name')

==>vp[name->okram]

gremlin> g.V(v).values('name') //(7)

==>okram

-

A vertex can have zero or more properties with the same key associated with it.

-

A vertex property can have standard key/value properties attached to it.

-

Vertex property removal is identical to property removal.

-

It is possible to get the properties of a vertex property.

-

A vertex property can have any number of key/value properties attached to it.

-

property(...) will remove all existing key’d properties before adding the new single property (see VertexProperty.Cardinality).

-

If only the value of a property is needed, then values() can be used.

If the concept of vertex properties is difficult to grasp, then it may be best to think of vertex properties in terms of "literal vertices." A vertex can have an edge to a "literal vertex" that holds a single key/value pair (e.g. "value=okram"). The edge that points to that literal vertex has an edge-label of "name." The properties on the edge represent the literal vertex’s properties. The "literal vertex" cannot have any other edges to it (only the one from the associated vertex).
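The two ideas can also be pictured with a small data model. The following is a minimal sketch in plain Java, assuming nothing beyond the standard library; the names VertexPropertySketch, VProp, add and set are hypothetical and are not TinkerPop types. It only illustrates that several values may share a key (multi-properties), that each value carries its own key/value map (meta-properties), and that a single-cardinality write replaces the existing values for a key.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Toy model of multi-properties and meta-properties; illustrative only, not TinkerPop types.
class VertexPropertySketch {
    // One key/value pair on a vertex that carries its own key/value pairs (meta-properties).
    static final class VProp {
        final String key;
        final Object value;
        final Map<String, Object> meta = new LinkedHashMap<>();

        VProp(String key, Object value) {
            this.key = key;
            this.value = value;
        }
    }

    private final List<VProp> props = new ArrayList<>();

    // List-style write: adds another value under the same key.
    VProp add(String key, Object value) {
        VProp p = new VProp(key, value);
        props.add(p);
        return p;
    }

    // Single-style write: drops the existing values for the key, then adds the new one.
    VProp set(String key, Object value) {
        props.removeIf(p -> p.key.equals(key));
        return add(key, value);
    }

    // Returns only the values stored under the key, in insertion order.
    List<Object> values(String key) {
        return props.stream()
                    .filter(p -> p.key.equals(key))
                    .map(p -> p.value)
                    .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        VertexPropertySketch v = new VertexPropertySketch();
        v.add("name", "marko").meta.put("acl", "private");
        v.add("name", "marko a. rodriguez").meta.put("acl", "public");
        System.out.println(v.values("name"));
        v.set("name", "okram");
        System.out.println(v.values("name"));
    }
}
```

In this model the meta map plays the role that edge properties play in the "literal vertex" picture above.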

|

Tip

|

A toy graph demonstrating all of the new TinkerPop3 graph structure features is available at TinkerFactory.createTheCrew() and data/tinkerpop-crew*. This graph demonstrates multi-properties and meta-properties.

|

gremlin> g.V().as('a').

properties('location').as('b').

hasNot('endTime').as('c').

select('a','b','c').by('name').by(value).by('startTime') // determine the current location of each person

==>[a:marko, b:santa fe, c:2005]

==>[a:stephen, b:purcellville, c:2006]

==>[a:matthias, b:seattle, c:2014]

==>[a:daniel, b:aachen, c:2009]

gremlin> g.V().has('name','gremlin').inE('uses').

order().by('skill',incr).as('a').

outV().as('b').

select('a','b').by('skill').by('name') // rank the users of gremlin by their skill level

==>[a:3, b:matthias]

==>[a:4, b:marko]

==>[a:5, b:stephen]

==>[a:5, b:daniel]

Graph Variables

TinkerPop3 introduces the concept of Graph.Variables. Variables are key/value pairs associated with the graph itself — in essence, a Map<String,Object>. These variables are intended to store metadata about the graph. Example use cases include:

-

Schema information: What do the namespace prefixes resolve to and when was the schema last modified?

-

Global permissions: What are the access rights for particular groups?

-

System user information: Who are the admins of the system?

An example of graph variables in use is presented below:

gremlin> graph = TinkerGraph.open()

==>tinkergraph[vertices:0 edges:0]

gremlin> graph.variables()

==>variables[size:0]

gremlin> graph.variables().set('systemAdmins',['stephen','peter','pavel'])

==>null

gremlin> graph.variables().set('systemUsers',['matthias','marko','josh'])

==>null

gremlin> graph.variables().keys()

==>systemAdmins

==>systemUsers

gremlin> graph.variables().get('systemUsers')

==>Optional[[matthias, marko, josh]]

gremlin> graph.variables().get('systemUsers').get()

==>matthias

==>marko

==>josh

gremlin> graph.variables().remove('systemAdmins')

==>null

gremlin> graph.variables().keys()

==>systemUsers

|

Important

|

Graph variables are not intended to be subject to heavy, concurrent mutation nor to be used in complex computations. The intention is to have a location to store data about the graph for administrative purposes. |

Graph Transactions

A database transaction represents a unit of work to execute against the database. Transactions are controlled by an implementation of the Transaction interface and that object can be obtained from the Graph interface using the tx() method. It is important to note that the Transaction object does not represent a "transaction" itself. It merely exposes the methods for working with transactions (e.g. committing, rolling back, etc).

Most Graph implementations that supportsTransactions will implement an "automatic" ThreadLocal transaction, which means that when a read or write occurs after the Graph is instantiated, a transaction is automatically started within that thread. There is no need to manually call a method to "create" or "start" a transaction. Simply modify the graph as required and call graph.tx().commit() to apply changes or graph.tx().rollback() to undo them. When the next read or write action occurs against the graph, a new transaction will be started within that current thread of execution.

When using transactions in this fashion, especially in a web application (e.g. a REST server), it is important to ensure that transactions do not leak from one request to the next. In other words, unless a client is somehow bound via session to process every request on the same server thread, every request must be committed or rolled back at the end of the request. Ensuring that each request encapsulates a transaction means that a future request processed on the same server thread starts in a fresh transactional state and does not have access to the remains of an earlier request. A good strategy is to roll back the transaction at the start of a request, so that if a transactional leak does somehow occur between requests, the new request is still assured a fresh transaction.

|

Tip

|

The tx() method is on the Graph interface, but it is also available on the TraversalSource spawned from a Graph. Calls to TraversalSource.tx() are proxied through to the underlying Graph as a convenience.

|

Configuring

Determining when a transaction starts is dependent upon the behavior assigned to the Transaction. It is up to the Graph implementation to determine the default behavior and unless the implementation doesn’t allow it, the behavior itself can be altered via these Transaction methods:

public Transaction onReadWrite(final Consumer<Transaction> consumer);

public Transaction onClose(final Consumer<Transaction> consumer);

Providing a Consumer function to onReadWrite allows definition of how a transaction starts when a read or a write occurs. Transaction.READ_WRITE_BEHAVIOR contains pre-defined Consumer functions to supply to the onReadWrite method. It has two options:

-

AUTO - automatic transactions where the transaction is started implicitly by the read or write operation

-

MANUAL - manual transactions where it is up to the user to explicitly open a transaction, throwing an exception if the transaction is not open

Providing a Consumer function to onClose allows configuration of how a transaction is handled when Graph.close() is called. Transaction.CLOSE_BEHAVIOR has several pre-defined options that can be supplied to this method:

-

COMMIT - automatically commit an open transaction

-

ROLLBACK - automatically roll back an open transaction

-

MANUAL - throw an exception if a transaction is open, forcing the user to explicitly close the transaction
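To make the Consumer-based configuration more concrete, here is a minimal stand-alone sketch of the idea in plain Java. The class TxSketch and its nested ReadWrite enum are hypothetical stand-ins written for this illustration; they are not the TinkerPop Transaction interface, and a real implementation may differ in detail. The sketch only shows how a pluggable consumer, consulted before every read or write, can yield either automatic or manual transaction starts.

```java
import java.util.function.Consumer;

// Hypothetical stand-in for a transaction handle; not the TinkerPop Transaction interface.
class TxSketch {
    private boolean open = false;
    private Consumer<TxSketch> readWrite = ReadWrite.AUTO;

    boolean isOpen() { return open; }
    void open() { open = true; }
    void commit() { open = false; }

    // Same shape as onReadWrite: swap in a behavior and return this for chaining.
    TxSketch onReadWrite(Consumer<TxSketch> behavior) {
        this.readWrite = behavior;
        return this;
    }

    // Every read or write first consults the configured behavior.
    String addVertex(String name) {
        readWrite.accept(this);
        return "v[" + name + "]";
    }

    // Pre-defined behaviors, modeled as enum constants that are themselves consumers.
    enum ReadWrite implements Consumer<TxSketch> {
        AUTO {
            @Override
            public void accept(TxSketch tx) {
                if (!tx.isOpen()) tx.open(); // start implicitly
            }
        },
        MANUAL {
            @Override
            public void accept(TxSketch tx) {
                if (!tx.isOpen()) {
                    throw new IllegalStateException("Open a transaction before attempting to read/write");
                }
            }
        }
    }

    public static void main(String[] args) {
        TxSketch tx = new TxSketch();
        tx.addVertex("stephen");            // AUTO opens the transaction
        System.out.println(tx.isOpen());
        tx.commit();
        tx.onReadWrite(ReadWrite.MANUAL);
        try {
            tx.addVertex("marko");          // rejected: nothing was opened
        } catch (IllegalStateException e) {
            System.out.println("rejected: " + e.getMessage());
        }
        tx.open();
        System.out.println(tx.addVertex("marko"));
    }
}
```

A close behavior could be modeled the same way, with a second consumer that is invoked when the graph is closed.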

Once there is an understanding for how transactions are configured, most of the rest of the Transaction interface is self-explanatory. Note that Neo4j-Gremlin is used for the examples to follow as TinkerGraph does not support transactions.

gremlin> graph = Neo4jGraph.open('/tmp/neo4j')

==>neo4jgraph[EmbeddedGraphDatabase [/tmp/neo4j]]

gremlin> graph.features()

==>FEATURES

> GraphFeatures

>-- Transactions: true (1)

>-- Computer: false

>-- Persistence: true

...

gremlin> graph.tx().onReadWrite(Transaction.READ_WRITE_BEHAVIOR.AUTO) (2)

==>org.apache.tinkerpop.gremlin.neo4j.structure.Neo4jGraph$Neo4jTransaction@1c067c0d

gremlin> graph.addVertex("name","stephen") (3)

==>v[0]

gremlin> graph.tx().commit() (4)

==>null

gremlin> graph.tx().onReadWrite(Transaction.READ_WRITE_BEHAVIOR.MANUAL) (5)

==>org.apache.tinkerpop.gremlin.neo4j.structure.Neo4jGraph$Neo4jTransaction@1c067c0d

gremlin> graph.tx().isOpen()

==>false

gremlin> graph.addVertex("name","marko") (6)

Open a transaction before attempting to read/write the transaction

gremlin> graph.tx().open() (7)

==>null

gremlin> graph.addVertex("name","marko") (8)

==>v[1]

gremlin> graph.tx().commit()

==>null

-

Check features to ensure that the graph supports transactions.

-

By default, Neo4jGraph is configured with "automatic" transactions, so it is set here for demonstration purposes only.

-

When the vertex is added, the transaction is automatically started. From this point, more mutations can be staged or other read operations executed in the context of that open transaction.

-

Calling commit finalizes the transaction.

-

Change transaction behavior to require manual control.

-

Adding a vertex now results in failure because the transaction was not explicitly opened.

-

Explicitly open a transaction.

-

Adding a vertex now succeeds as the transaction was manually opened.

|

Note

|

It may be important to consult the documentation of the Graph implementation when it comes to the specifics of how transactions will behave. TinkerPop allows some latitude in this area and implementations may not have the exact same behaviors and ACID guarantees.

|

Retries

There are times when transactions fail. Failure may be indicative of some permanent condition, but other failures might simply require the transaction to be retried for possible future success. The Transaction object also exposes a method for executing automatic transaction retries:

gremlin> graph = Neo4jGraph.open('/tmp/neo4j')

==>neo4jgraph[org.neo4j.tinkerpop.api.impl.Neo4jGraphAPIImpl@b3ccc63]

gremlin> graph.tx().submit {it.addVertex("name","josh")}.retry(10)

==>v[0]

gremlin> graph.tx().submit {it.addVertex("name","daniel")}.exponentialBackoff(10)

==>v[1]

gremlin> graph.close()

==>null

As shown above, the submit method takes a Function<Graph, R> which is the unit of work to execute and possibly retry on failure. The method returns a Transaction.Workload object which has a number of default methods for common retry strategies. It is also possible to supply a custom retry function if a default one does not suit the required purpose.
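For readers who want to see what a retry strategy of this kind involves, the following is a generic sketch in plain Java. It is not the Transaction.Workload implementation; RetrySketch, retryWithBackoff and its parameters are names assumed for this illustration. It runs a unit of work, and on failure waits and tries again, doubling the wait each time, until the attempt budget is used up.

```java
import java.util.function.Supplier;

// A stand-alone sketch of retrying a unit of work; names are illustrative, not TinkerPop API.
class RetrySketch {
    // Runs work up to maxAttempts times, doubling the delay after each failed attempt.
    static <R> R retryWithBackoff(Supplier<R> work, int maxAttempts, long initialDelayMs) {
        if (maxAttempts < 1) throw new IllegalArgumentException("maxAttempts must be >= 1");
        long delay = initialDelayMs;
        RuntimeException last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return work.get();
            } catch (RuntimeException e) {
                // A transactional implementation would typically roll back here before retrying.
                last = e;
                if (attempt == maxAttempts) break;
                try {
                    Thread.sleep(delay);
                } catch (InterruptedException ie) {
                    Thread.currentThread().interrupt();
                    throw new RuntimeException("interrupted while waiting to retry", ie);
                }
                delay *= 2;
            }
        }
        throw last; // every attempt failed: surface the most recent failure
    }

    public static void main(String[] args) {
        int[] calls = {0};
        String result = retryWithBackoff(() -> {
            if (++calls[0] < 3) throw new IllegalStateException("transient failure");
            return "v[0]";
        }, 10, 1);
        System.out.println(result + " after " + calls[0] + " attempts");
    }
}
```

The work is passed as a Supplier here for brevity; a function that receives the graph, as submit does, would follow the same loop.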

Threaded Transactions

Most Graph implementations that support transactions do so in a ThreadLocal manner, where the current transaction is bound to the current thread of execution. Consider the following example to demonstrate:

graph.addVertex("name","stephen");

Thread t1 = new Thread(() -> {

graph.addVertex("name","josh");

});

Thread t2 = new Thread(() -> {

graph.addVertex("name","marko");

});

t1.start();

t2.start();

t1.join();

t2.join();

graph.tx().commit();

The above code shows three vertices added to graph in three different threads: the current thread, t1 and t2. One might expect that, by the time this body of code finished executing, there would be three vertices persisted to the Graph. However, given the ThreadLocal nature of transactions, there really were three separate transactions created in that body of code (i.e. one for each thread of execution) and the only one committed was the one containing the first call to addVertex in the primary thread of execution. The other two calls to that method within t1 and t2 were never committed and thus orphaned.

A Graph that supportsThreadedTransactions is one that allows for a Graph to operate outside of that constraint, thus allowing multiple threads to operate within the same transaction. Therefore, if there was a need to have three different threads operating within the same transaction, the above code could be re-written as follows:

Graph threaded = graph.tx().newThreadedTx();

threaded.addVertex("name","stephen");

Thread t1 = new Thread(() -> {

threaded.addVertex("name","josh");

});

Thread t2 = new Thread(() -> {

threaded.addVertex("name","marko");

});

t1.start();

t2.start();

t1.join();

t2.join();

threaded.tx().commit();

In the above case, the call to graph.tx().newThreadedTx() creates a new Graph instance that is unbound from the ThreadLocal transaction, thus allowing each thread to operate on it in the same context. In this case, there would be three separate vertices persisted to the Graph.
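The difference between the two listings can be reproduced with a toy model that needs no graph database. The sketch below is plain Java under stated assumptions: ThreadedTxSketch, SharedTx and newSharedTx are hypothetical names, uncommitted writes are modeled as simple lists, and nothing here is TinkerPop code. The thread-bound variant keeps one pending buffer per thread, while the shared variant keeps the buffer on a handle that any thread may use.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

// A toy model of thread-bound versus shared transactions; it is not TinkerPop code.
class ThreadedTxSketch {
    // Committed data, visible to every thread.
    private final List<String> committed = new CopyOnWriteArrayList<>();
    // Uncommitted writes of the thread-bound transaction: one private buffer per thread.
    private final ThreadLocal<List<String>> pending = ThreadLocal.withInitial(ArrayList::new);

    void addVertex(String name) { pending.get().add(name); }

    // Commits only the calling thread's buffer.
    void commit() {
        committed.addAll(pending.get());
        pending.get().clear();
    }

    int committedCount() { return committed.size(); }

    // A transaction handle whose buffer belongs to the handle, not to a thread.
    final class SharedTx {
        private final List<String> buffer = new CopyOnWriteArrayList<>();

        void addVertex(String name) { buffer.add(name); }

        void commit() {
            committed.addAll(buffer);
            buffer.clear();
        }
    }

    SharedTx newSharedTx() { return new SharedTx(); }

    // Runs both tasks on their own threads and waits for them to finish.
    static void runBoth(Runnable a, Runnable b) {
        Thread t1 = new Thread(a);
        Thread t2 = new Thread(b);
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    // Mirrors the first listing: only the committing thread's write survives.
    static int threadBound() {
        ThreadedTxSketch graph = new ThreadedTxSketch();
        graph.addVertex("stephen");
        runBoth(() -> graph.addVertex("josh"), () -> graph.addVertex("marko"));
        graph.commit();
        return graph.committedCount();
    }

    // Mirrors the second listing: all three writes share one transaction.
    static int shared() {
        ThreadedTxSketch graph = new ThreadedTxSketch();
        SharedTx tx = graph.newSharedTx();
        tx.addVertex("stephen");
        runBoth(() -> tx.addVertex("josh"), () -> tx.addVertex("marko"));
        tx.commit();
        return graph.committedCount();
    }

    public static void main(String[] args) {
        System.out.println(threadBound()); // prints 1
        System.out.println(shared());      // prints 3
    }
}
```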

Gremlin I/O

The task of getting data in and out of Graph instances is the job of the Gremlin I/O packages. Gremlin I/O provides two interfaces for reading and writing Graph instances: GraphReader and GraphWriter. These interfaces expose methods that support:

-

Reading and writing an entire Graph

-

Reading and writing a Traversal<Vertex> as adjacency list format

-

Reading and writing a single Vertex (with and without associated Edge objects)

-

Reading and writing a single Edge

-

Reading and writing a single VertexProperty

-

Reading and writing a single Property

-

Reading and writing an arbitrary Object

In all cases, these methods operate in the currency of InputStream and OutputStream objects, allowing graphs and their related elements to be written to and read from files, byte arrays, etc. The Graph interface offers the io method, which provides access to "reader/writer builder" objects that are pre-configured with serializers provided by the Graph, as well as helper methods for the various I/O capabilities. Unless there are very advanced requirements for the serialization process, it is always best to utilize the methods on the Io interface to construct GraphReader and GraphWriter instances, as the implementation may provide some custom settings that would otherwise have to be configured manually by the user to do the serialization.

It is up to the implementations of the GraphReader and GraphWriter interfaces to choose the methods they implement and the manner in which they work together. The only semantics enforced and expected is that the write methods should produce output that is compatible with the corresponding read method (e.g. the output of writeVertices should be readable as input to readVertices and the output of writeProperty should be readable as input to readProperty).

GraphML Reader/Writer

The GraphML file format is a common XML-based representation of a graph. It is widely supported by graph-related tools and libraries making it a solid interchange format for TinkerPop. In other words, if the intent is to work with graph data in conjunction with applications outside of TinkerPop, GraphML may be the best choice to do that. Common use cases might be:

As GraphML is a specification for the serialization of an entire graph and not the individual elements of a graph, methods that support input and output of single vertices, edges, etc. are not supported.

|

Caution

|

GraphML is a "lossy" format in that it only supports primitive values for properties and does not have support for Graph variables. It will use toString to serialize property values outside of those primitives.

|

|

Caution

|

GraphML, as a specification, allows for <edge> and <node> elements to appear in any order. The GraphMLReader supports that; however, the capability comes with a limitation. TinkerPop does not allow the vertex label to be changed after the vertex has been created. Therefore, if an <edge> element comes before the <node>, the label on the vertex will be ignored. It is thus better to order <node> elements in the GraphML to appear before all <edge> elements if vertex labels are important to the graph.

|

The following code shows how to write a Graph instance to a file called tinkerpop-modern.xml and then how to read that file back into a different instance:

final Graph graph = TinkerFactory.createModern();

graph.io(IoCore.graphml()).writeGraph("tinkerpop-modern.xml");

final Graph newGraph = TinkerGraph.open();

newGraph.io(IoCore.graphml()).readGraph("tinkerpop-modern.xml");

If a custom configuration is required, then have the Graph generate a GraphReader or GraphWriter "builder" instance:

final Graph graph = TinkerFactory.createModern();

try (final OutputStream os = new FileOutputStream("tinkerpop-modern.xml")) {

graph.io(IoCore.graphml()).writer().normalize(true).create().writeGraph(os, graph);

}

final Graph newGraph = TinkerGraph.open();

try (final InputStream stream = new FileInputStream("tinkerpop-modern.xml")) {

newGraph.io(IoCore.graphml()).reader().vertexIdKey("name").create().readGraph(stream, newGraph);

}

GraphSON Reader/Writer

GraphSON is a JSON-based format extended from earlier versions of TinkerPop. It is important to note that TinkerPop3’s GraphSON is not backwards compatible with prior TinkerPop GraphSON versions. GraphSON has some support from graph-related applications outside of TinkerPop, but it is generally best used in two cases:

-

A text format of the graph or its elements is desired (e.g. debugging, usage in source control, etc.)

-

The graph or its elements need to be consumed by code that is not JVM-based (e.g. JavaScript, Python, .NET, etc.)

GraphSON supports all of the GraphReader and GraphWriter interface methods and can therefore read or write an entire Graph, vertices, arbitrary objects, etc. The following code shows how to write a Graph instance to a file called tinkerpop-modern.json and then how to read that file back into a different instance:

final Graph graph = TinkerFactory.createModern();

graph.io(IoCore.graphson()).writeGraph("tinkerpop-modern.json");

final Graph newGraph = TinkerGraph.open();

newGraph.io(IoCore.graphson()).readGraph("tinkerpop-modern.json");

If a custom configuration is required, then have the Graph generate a GraphReader or GraphWriter "builder" instance:

final Graph graph = TinkerFactory.createModern();

try (final OutputStream os = new FileOutputStream("tinkerpop-modern.json")) {

final GraphSONMapper mapper = graph.io(IoCore.graphson()).mapper().normalize(true).create();

graph.io(IoCore.graphson()).writer().mapper(mapper).create().writeGraph(os, graph);

}

final Graph newGraph = TinkerGraph.open();

try (final InputStream stream = new FileInputStream("tinkerpop-modern.json")) {

newGraph.io(IoCore.graphson()).reader().vertexIdKey("name").create().readGraph(stream, newGraph);

}

One of the important configuration options of the GraphSONReader and GraphSONWriter is the ability to embed type information into the output. By embedding the types, it becomes possible to serialize a graph without losing type information that might be important when being consumed by another source. The importance of this concept is demonstrated in the following example where a single Vertex is written to GraphSON using the Gremlin Console:

gremlin> graph = TinkerFactory.createModern()

==>tinkergraph[vertices:6 edges:6]

gremlin> g = graph.traversal()

==>graphtraversalsource[tinkergraph[vertices:6 edges:6], standard]

gremlin> f = new FileOutputStream("vertex-1.json")

==>java.io.FileOutputStream@40aa96e4

gremlin> graph.io(graphson()).writer().create().writeVertex(f, g.V(1).next(), BOTH)

==>null

gremlin> f.close()

==>null

The following GraphSON example shows the output of GraphSONWriter.writeVertex() with associated edges:

{

"id": 1,

"label": "person",

"outE": {

"created": [

{

"id": 9,

"inV": 3,

"properties": {

"weight": 0.4

}

}

],

"knows": [

{

"id": 7,

"inV": 2,

"properties": {

"weight": 0.5

}

},

{

"id": 8,

"inV": 4,

"properties": {

"weight": 1

}

}

]

},

"properties": {

"name": [

{

"id": 0,

"value": "marko"

}

],

"age": [

{

"id": 1,

"value": 29

}

]

}

}

The vertex properly serializes to valid JSON, but note that a consuming application will not automatically know how to interpret the numeric values. In coercing those Java values to JSON, such information is lost.

With a minor change to the construction of the GraphSONWriter the lossy nature of GraphSON can be avoided:

gremlin> graph = TinkerFactory.createModern()

==>tinkergraph[vertices:6 edges:6]

gremlin> g = graph.traversal()

==>graphtraversalsource[tinkergraph[vertices:6 edges:6], standard]

gremlin> f = new FileOutputStream("vertex-1.json")

==>java.io.FileOutputStream@213024c4

gremlin> mapper = graph.io(graphson()).mapper().embedTypes(true).create()

==>org.apache.tinkerpop.gremlin.structure.io.graphson.GraphSONMapper@45a501d9

gremlin> graph.io(graphson()).writer().mapper(mapper).create().writeVertex(f, g.V(1).next(), BOTH)

==>null

gremlin> f.close()

==>null

In the above code, the embedTypes option is set to true and the output below shows the difference:

{

"@class": "java.util.HashMap",

"id": 1,

"label": "person",

"outE": {

"@class": "java.util.HashMap",

"created": [

"java.util.ArrayList",

[

{

"@class": "java.util.HashMap",

"id": 9,

"inV": 3,

"properties": {

"@class": "java.util.HashMap",

"weight": 0.4

}

}

]

],

"knows": [

"java.util.ArrayList",

[

{

"@class": "java.util.HashMap",

"id": 7,

"inV": 2,

"properties": {

"@class": "java.util.HashMap",

"weight": 0.5

}

},

{

"@class": "java.util.HashMap",

"id": 8,

"inV": 4,

"properties": {

"@class": "java.util.HashMap",

"weight": 1

}

}

]

]

},

"properties": {

"@class": "java.util.HashMap",

"name": [

"java.util.ArrayList",

[

{

"@class": "java.util.HashMap",

"id": [

"java.lang.Long",

0

],

"value": "marko"

}

]

],

"age": [

"java.util.ArrayList",

[

{

"@class": "java.util.HashMap",

"id": [

"java.lang.Long",

1

],

"value": 29

}

]

]

}

}

The ambiguity of components of the GraphSON is now removed by the @class property, which contains Java class information for the data it is associated with. The @class property is used for all non-final types, with the exception of a small number of "natural" types (String, Boolean, Integer, and Double) which can be correctly inferred from JSON typing. While the output is more verbose, it comes with the security of not losing type information. While non-JVM languages won’t be able to consume this information automatically, at least there is a hint as to how the values should be coerced back into the correct types in the target language.

Gryo Reader/Writer

Kryo is a popular serialization package for the JVM. Gremlin-Kryo is a binary Graph serialization format for use on the JVM by JVM languages. It is designed to be space efficient, non-lossy and is promoted as the standard format to use when working with graph data inside of the TinkerPop stack. A list of common use cases is presented below:

-

Migration from one Gremlin Structure implementation to another (e.g. TinkerGraph to Neo4jGraph)

-

Serialization of individual graph elements to be sent over the network to another JVM.

-

Backups of in-memory graphs or subgraphs.

|

Caution

|

When migrating between Gremlin Structure implementations, Kryo may not lose data, but it is important to consider the features of each Graph and whether or not the data types supported in one will be supported in the other. Failure to do so may result in errors.

|

Kryo supports all of the GraphReader and GraphWriter interface methods and can therefore read or write an entire Graph, vertices, edges, etc. The following code shows how to write a Graph instance to a file called tinkerpop-modern.kryo and then how to read that file back into a different instance:

final Graph graph = TinkerFactory.createModern();

graph.io(IoCore.gryo()).writeGraph("tinkerpop-modern.kryo");

final Graph newGraph = TinkerGraph.open();

newGraph.io(IoCore.gryo()).readGraph("tinkerpop-modern.kryo");

If a custom configuration is required, then have the Graph generate a GraphReader or GraphWriter "builder" instance:

final Graph graph = TinkerFactory.createModern();

try (final OutputStream os = new FileOutputStream("tinkerpop-modern.kryo")) {

graph.io(IoCore.gryo()).writer().create().writeGraph(os, graph);

}

final Graph newGraph = TinkerGraph.open();

try (final InputStream stream = new FileInputStream("tinkerpop-modern.kryo")) {

newGraph.io(IoCore.gryo()).reader().vertexIdKey("name").create().readGraph(stream, newGraph);

}

|

Note

|

The preferred extension for file names produced by Gryo is .kryo.

|

TinkerPop2 Data Migration

For those using TinkerPop2, migrating to TinkerPop3 will mean a number of programming changes, but may also require a migration of the data depending on the graph implementation. For example, trying to open TinkerGraph data from TinkerPop2 with TinkerPop3 code will not work; however, opening a TinkerPop2 Neo4jGraph with a TinkerPop3 Neo4jGraph should work provided there aren’t Neo4j version compatibility mismatches preventing the read.

If a particular TinkerPop2 Graph cannot be read by TinkerPop3, a "legacy" data migration approach exists. The migration involves writing the TinkerPop2 Graph to GraphSON, then reading it into TinkerPop3 with the LegacyGraphSONReader (a limited implementation of the GraphReader interface).

The following represents an example migration of the "classic" toy graph. In this example, the "classic" graph is saved to GraphSON using TinkerPop2.

gremlin> Gremlin.version()

==>2.5.z

gremlin> graph = TinkerGraphFactory.createTinkerGraph()

==>tinkergraph[vertices:6 edges:6]

gremlin> GraphSONWriter.outputGraph(graph,'/tmp/tp2.json',GraphSONMode.EXTENDED)

==>null

The above console session uses the gremlin-groovy distribution from TinkerPop2. It is important to generate the tp2.json file using the EXTENDED mode as it will include data types when necessary, which will help limit "lossiness" on the TinkerPop3 side when imported. Once tp2.json is created, it can then be imported to a TinkerPop3 Graph.

gremlin> Gremlin.version()

==>3.0.1-SNAPSHOT

gremlin> graph = TinkerGraph.open()

==>tinkergraph[vertices:0 edges:0]

gremlin> r = LegacyGraphSONReader.build().create()

==>org.apache.tinkerpop.gremlin.structure.io.graphson.LegacyGraphSONReader@64337702

gremlin> r.readGraph(new FileInputStream('/tmp/tp2.json'), graph)

==>null

gremlin> g = graph.traversal(standard())

==>graphtraversalsource[tinkergraph[vertices:6 edges:6], standard]

gremlin> g.E()

==>e[11][4-created->3]

==>e[12][6-created->3]

==>e[7][1-knows->2]

==>e[8][1-knows->4]

==>e[9][1-created->3]

==>e[10][4-created->5]

Namespace Conventions

End users, vendors, GraphComputer algorithm designers, GremlinPlugin creators, etc. all leverage properties on elements to store information. There are a few conventions that should be respected when naming property keys to ensure that these stakeholders do not conflict with one another.

-

End users are granted the flat namespace (e.g. name, age, location) to key their properties and label their elements.

-

Vendors are granted the hidden namespace (e.g. ~metadata) to key their properties and labels. Data key’d as such is only accessible via the vendor implementation code and no other stakeholders are granted read nor write access to data prefixed with "~" (see Graph.Hidden). Test coverage and exceptions exist to ensure that vendors respect this hard boundary.

-

VertexProgram and MapReduce developers should, like GraphStrategy developers, leverage qualified namespaces particular to their domain (e.g. mydomain.myvertexprogram.computedata).

-

GremlinPlugin creators should prefix their plugin name with their domain (e.g. mydomain.myplugin).

|

Important

|

TinkerPop uses tinkerpop. and gremlin. as the prefixes for provided strategies, vertex programs, map reduce implementations, and plugins.

|

The only truly protected namespace is the hidden namespace provided to vendors. From there, it is up to engineers to respect the namespacing conventions presented.
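The conventions above amount to a few string rules on keys, which the following plain Java sketch spells out. It is a stand-alone model: NamespaceSketch and its methods are hypothetical names chosen for this illustration, and the only detail taken from the text is that hidden keys are prefixed with "~". It is not a substitute for the checks a Graph implementation performs.

```java
// A stand-alone model of the key conventions; class and method names are illustrative.
class NamespaceSketch {
    static final String HIDDEN_PREFIX = "~";

    // True when the key sits in the hidden (vendor-only) namespace.
    static boolean isHidden(String key) {
        return key.startsWith(HIDDEN_PREFIX);
    }

    // Turns a plain key into a hidden one; keys that are already hidden are returned unchanged.
    static String hide(String key) {
        return isHidden(key) ? key : HIDDEN_PREFIX + key;
    }

    // Guard for user-facing code paths: rejects keys in the vendor namespace.
    static String requireUserKey(String key) {
        if (isHidden(key)) {
            throw new IllegalArgumentException("hidden keys are reserved for vendors: " + key);
        }
        return key;
    }

    // Builds a qualified key such as mydomain.myvertexprogram.computedata.
    static String qualify(String domain, String component, String name) {
        return String.join(".", domain, component, name);
    }

    public static void main(String[] args) {
        System.out.println(requireUserKey("name"));
        System.out.println(hide("metadata"));
        System.out.println(qualify("mydomain", "myvertexprogram", "computedata"));
    }
}
```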

The Traversal

At the most general level there is Traversal<S,E> which implements Iterator<E>, where the S stands for start and the E stands for end. A traversal is composed of four primary components:

-

Step<S,E>: an individual function applied to S to yield E. Steps are chained within a traversal.

-

TraversalStrategy: interceptor methods to alter the execution of the traversal (e.g. query re-writing).

-

TraversalSideEffects: key/value pairs that can be used to store global information about the traversal.

-

Traverser<T>: the object propagating through the Traversal currently representing an object of type T.

The classic notion of a graph traversal is provided by GraphTraversal<S,E> which extends Traversal<S,E>. GraphTraversal provides an interpretation of the graph data in terms of vertices, edges, etc. and thus, a graph traversal DSL.

Important: The underlying Step implementations provided by TinkerPop should encompass most of the functionality required by a DSL author. It is important that DSL authors leverage the provided steps, as the common optimization and decoration strategies can then reason on the underlying traversal sequence. If new steps are introduced, then common traversal strategies may not function properly.

Graph Traversal Steps

A GraphTraversal<S,E> is spawned from a GraphTraversalSource. It can also be spawned anonymously (i.e. empty) via __. A graph traversal is composed of an ordered list of steps. All the steps provided by GraphTraversal inherit from the more general forms diagrammed above. A list of all the steps (and their descriptions) is provided in the TinkerPop3 GraphTraversal JavaDoc. The following subsections demonstrate the GraphTraversal steps using the Gremlin Console.

Note: To reduce the verbosity of the expression, it is good to import static org.apache.tinkerpop.gremlin.process.traversal.dsl.graph.__.*. This way, instead of doing __.inE() for an anonymous traversal, it is possible to simply write inE(). Be aware of language-specific reserved keywords when using anonymous traversals. For example, in and as are reserved keywords in Groovy, therefore you must use the verbose syntax __.in() and __.as() to avoid collisions.

Lambda Steps

Caution: Lambda steps are presented for educational purposes as they represent the foundational constructs of the Gremlin language. In practice, lambda steps should be avoided and traversal verification strategies exist to disallow their use unless explicitly "turned off." For more information on the problems with lambdas, please read A Note on Lambdas.

There are five generic steps which all other specific steps described later extend.

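| Step | Description |
|---|---|
| map(Function<Traverser<S>, E>) | map the traverser to some object of type E for the next step to process. |
| flatMap(Function<Traverser<S>, Iterator<E>>) | map the traverser to an iterator of E objects that are streamed to the next step. |
| filter(Predicate<Traverser<S>>) | map the traverser to either true or false, where false will not pass the traverser to the next step. |
| sideEffect(Consumer<Traverser<S>>) | perform some operation on the traverser and pass it to the next step. |
| branch(Function<Traverser<S>, M>) | split the traverser to all the traversals indexed by the M token. |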
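As a rough analogy only, the first four generic steps behave much like the filter, peek, map, and flatMap operations of java.util.stream. The sketch below is plain Java with no TinkerPop dependency; the class GenericStepsSketch and its toy vertex type V are hypothetical, and the data mirrors the toy graph used in the console examples of this section.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

public class GenericStepsSketch {
    // Toy stand-in for a vertex; a hypothetical class, not part of TinkerPop.
    static final class V {
        final int id;
        final String label;
        final String name;
        final List<String> created;

        V(int id, String label, String name, String... created) {
            this.id = id;
            this.label = label;
            this.name = name;
            this.created = Arrays.asList(created);
        }
    }

    static final List<V> VERTICES = Arrays.asList(
        new V(1, "person", "marko", "lop"),
        new V(2, "person", "vadas"),
        new V(3, "software", "lop"),
        new V(4, "person", "josh", "ripple", "lop"),
        new V(5, "software", "ripple"),
        new V(6, "person", "peter", "lop"));

    // filter, then sideEffect, then map.
    static List<String> personNames(List<Integer> seen) {
        return VERTICES.stream()
            .filter(v -> v.label.equals("person")) // filter: false drops the element
            .peek(v -> seen.add(v.id))             // sideEffect: observe, pass along unchanged
            .map(v -> v.name)                      // map: one input, one output
            .collect(Collectors.toList());
    }

    // flatMap: one input, zero or more outputs.
    static List<String> createdByAnyone() {
        return VERTICES.stream()
            .flatMap(v -> v.created.stream())
            .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Integer> seen = new ArrayList<>();
        System.out.println(personNames(seen));
        System.out.println(seen);
        System.out.println(createdByAnyone());
    }
}
```

The analogy stops at branch(), which has no direct stream counterpart, and a stream element carries none of the path, loop, or bulk information that a Traverser does.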

The Traverser<S> object provides access to:

- The current traversed S object: Traverser.get().

- The current path traversed by the traverser: Traverser.path().

  - A helper shorthand to get a particular path-history object: Traverser.path(String) == Traverser.path().get(String).

- The number of times the traverser has gone through the current loop: Traverser.loops().

- The number of objects represented by this traverser: Traverser.bulk().

- The local data structure associated with this traverser: Traverser.sack().

- The side-effects associated with the traversal: Traverser.sideEffects().

  - A helper shorthand to get a particular side-effect: Traverser.sideEffect(String) == Traverser.sideEffects().get(String).

gremlin> g.V(1).out().values('name') //(1)

==>lop

==>vadas

==>josh

gremlin> g.V(1).out().map {it.get().value('name')} //(2)

==>lop

==>vadas

==>josh

1. An outgoing traversal from vertex 1 to the name values of the adjacent vertices.

2. The same operation, but using a lambda to access the name property values.

gremlin> g.V().filter {it.get().label() == 'person'} //(1)

==>v[1]

==>v[2]

==>v[4]

==>v[6]

gremlin> g.V().hasLabel('person') //(2)

==>v[1]

==>v[2]

==>v[4]

==>v[6]

1. A filter that only allows the vertex to pass if its label is "person".

2. The more specific has()-step is implemented as a filter() with the respective predicate.

gremlin> g.V().hasLabel('person').sideEffect(System.out.&println) //(1)

v[1]

==>v[1]

v[2]

==>v[2]

v[4]

==>v[4]

v[6]

==>v[6]

1. Whatever enters sideEffect() is passed to the next step, but some intervening process can occur.

gremlin> g.V().branch(values('name')).

option('marko', values('age')).

option(none, values('name')) //(1)

==>29

==>vadas

==>lop

==>josh

==>ripple

==>peter

gremlin> g.V().choose(has('name','marko'),

values('age'),

values('name')) //(2)

==>29

==>vadas

==>lop

==>josh

==>ripple

==>peter

1. If the vertex is "marko", get his age, else get the name of the vertex.

2. The more specific boolean-based choose()-step is implemented as a branch().

AddEdge Step

Reasoning is the process of making explicit in the data what is implicit in the data. What is explicit in a graph are the objects of the graph — i.e. vertices and edges. What is implicit in the graph is the traversal. In other words, traversals expose meaning where the meaning is defined by the traversal description. For example, take the concept of a "co-developer." Two people are co-developers if they have worked on the same project together. This concept can be represented as a traversal and thus, the concept of "co-developers" can be derived. To add edges via a traversal, there is a collection of addE()-steps (map/sideEffect).
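To make the "co-developer" derivation concrete outside of Gremlin, here is a minimal sketch in plain Java. It is not TinkerPop code: the class CoDeveloperSketch and the CREATED map are hypothetical, and the data is limited to the created edges visible in this section's console output. Each loop is annotated with the traversal step it loosely corresponds to.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class CoDeveloperSketch {
    // person -> projects created (explicit "created" edges of the toy graph)
    static final Map<String, List<String>> CREATED = new LinkedHashMap<>();
    static {
        CREATED.put("marko", Arrays.asList("lop"));
        CREATED.put("josh", Arrays.asList("ripple", "lop"));
        CREATED.put("peter", Arrays.asList("lop"));
    }

    // Derives the implicit co-developer edges pointing at the given person.
    static List<String> coDeveloperEdges(String person) {
        List<String> edges = new ArrayList<>();
        for (String project : CREATED.getOrDefault(person, Collections.emptyList())) { // out('created')
            for (Map.Entry<String, List<String>> e : CREATED.entrySet()) {             // in('created')
                boolean isOther = !e.getKey().equals(person);                          // where(neq('a'))
                if (isOther && e.getValue().contains(project)) {
                    edges.add(e.getKey() + "-co-developer->" + person);                // new explicit edge
                }
            }
        }
        return edges;
    }

    public static void main(String[] args) {
        System.out.println(coDeveloperEdges("marko"));
    }
}
```

The point of the sketch is only that the new edges are computed from existing ones: nothing in CREATED mentions co-developers until the traversal-like loops make that relationship explicit.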

gremlin> g.V(1).as('a').out('created').in('created').where(neq('a')).addOutE('co-developer','a','year',2009) //(1)

==>e[12][4-co-developer->1]

==>e[13][6-co-developer->1]

gremlin> g.withSideEffect('a',g.V(3,5).toList()).V(4).addInE('createdBy','a') // (2)

==>e[14][3-createdBy->4]

==>e[15][5-createdBy->4]

gremlin> g.V().as('a').out('created').as('b').select('a','b').addOutE('b','createdBy','a','acl','public') //(3)

==>e[16][3-createdBy->1]

==>e[17][5-createdBy->4]

==>e[18][3-createdBy->4]

==>e[19][3-createdBy->6]

gremlin> g.V(1).as('a').out('knows').addInE('livesNear','a','year',2009).inV().inE('livesNear').values('year') //(4)

==>2009

==>2009

1. Add a co-developer edge with a year-property between marko and his collaborators.

2. Add incoming createdBy edges to the josh-vertex from the lop- and ripple-vertices.

3. It is possible to pull the vertices from a select-projection.

4. The newly created edge is a traversable object.

AddVertex Step

The addV()-step is used to add vertices to the graph (map/sideEffect). For every incoming object, a vertex is created. Moreover, GraphTraversalSource maintains an addV() method.

gremlin> g.addV(label,'person','name','stephen')

==>v[12]

gremlin> g.V().values('name')

==>marko

==>vadas

==>lop

==>josh

==>ripple

==>peter

==>stephen

gremlin> g.V().outE('knows').addV('name','nothing')

==>v[14]

==>v[16]

gremlin> g.V().has('name','nothing')

==>v[16]

==>v[14]

gremlin> g.V().has('name','nothing').bothE()

AddProperty Step

The property()-step is used to add properties to the elements of the graph (sideEffect). Unlike addV() and addE(), property() is a full sideEffect step in that it does not return the property it created, but the element that streamed into it.

gremlin> g.V(1).property('country','usa')

==>v[1]

gremlin> g.V(1).property('city','santa fe').property('state','new mexico').valueMap()

==>[country:[usa], city:[santa fe], name:[marko], state:[new mexico], age:[29]]

gremlin> g.V(1).property(list,'age',35)

==>v[1]

gremlin> g.V(1).valueMap()

==>[country:[usa], city:[santa fe], name:[marko], state:[new mexico], age:[29, 35]]

Aggregate Step

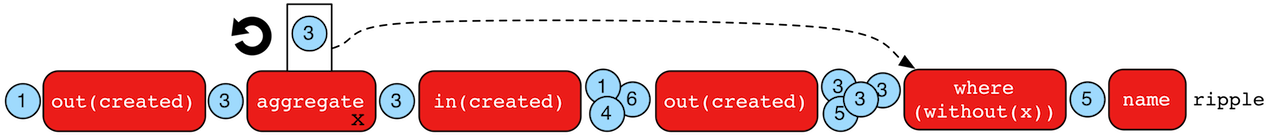

The aggregate()-step (sideEffect) is used to aggregate all the objects at a particular point of traversal into a Collection. The step uses eager evaluation in that no objects continue on until all previous objects have been fully aggregated (as opposed to store() which lazily fills a collection). The eager evaluation nature is crucial in situations where everything at a particular point is required for future computation. An example is provided below.

gremlin> g.V(1).out('created') //(1)

==>v[3]

gremlin> g.V(1).out('created').aggregate('x') //(2)

==>v[3]

gremlin> g.V(1).out('created').aggregate('x').in('created') //(3)

==>v[1]

==>v[4]

==>v[6]

gremlin> g.V(1).out('created').aggregate('x').in('created').out('created') //(4)

==>v[3]

==>v[5]

==>v[3]

==>v[3]

gremlin> g.V(1).out('created').aggregate('x').in('created').out('created').

where(without('x')).values('name') //(5)

==>ripple

1. What has marko created?

2. Aggregate all his creations.

3. Who are marko's collaborators?

4. What have marko's collaborators created?

5. What have marko's collaborators created that he hasn't created?

In recommendation systems, the above pattern is used:

"What has userA liked? Who else has liked those things? What have they liked that userA hasn't already liked?"
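The recommendation pattern can be sketched in plain Java to show why the collection must be complete before filtering begins. This is an illustration under assumptions, not TinkerPop code: the class AggregateSketch and the CREATED map are hypothetical, with "created" standing in for "liked" and data taken from the console output above.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

public class AggregateSketch {
    // person -> items created (toy data)
    static final Map<String, List<String>> CREATED = new LinkedHashMap<>();
    static {
        CREATED.put("marko", Arrays.asList("lop"));
        CREATED.put("josh", Arrays.asList("ripple", "lop"));
        CREATED.put("peter", Arrays.asList("lop"));
    }

    static List<String> recommend(String user) {
        List<String> own = CREATED.getOrDefault(user, Collections.emptyList()); // out('created')
        // aggregate('x'): the whole set is built before anything flows onward,
        // so the exclusion test below never sees a partially filled collection.
        Set<String> x = new HashSet<>(own);
        List<String> result = new ArrayList<>();
        for (String item : own) {
            for (Map.Entry<String, List<String>> e : CREATED.entrySet()) {      // in('created')
                if (!e.getValue().contains(item)) {
                    continue;
                }
                for (String other : e.getValue()) {                             // out('created')
                    if (!x.contains(other)) {                                   // where(without('x'))
                        result.add(other);
                    }
                }
            }
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(recommend("marko"));
    }
}
```

If x were filled lazily while results were already being emitted, an item the user created but that had not yet been added could slip through the exclusion test; that is the situation the eager evaluation guards against.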

Finally, the aggregate()-step can be modulated via a by()-projection.

gremlin> g.V().out('knows').aggregate('x').cap('x')

==>{v[2]=1, v[4]=1}

gremlin> g.V().out('knows').aggregate('x').by('name').cap('x')

==>{vadas=1, josh=1}

And Step

The and()-step ensures that all provided traversals yield a result (filter). Please see or() for or-semantics.

gremlin> g.V().and(

outE('knows'),

values('age').is(lt(30))).

values('name')

==>marko

The and()-step can take an arbitrary number of traversals. All traversals must produce at least one output for the original traverser to pass to the next step.
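The "every traversal must yield at least one result" rule can be sketched in plain Java as follows. The class AndStepSketch, its Person type, and the and() helper are hypothetical and only model the semantics; the ages of vadas, josh, and peter are assumed values for illustration.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.function.Function;
import java.util.stream.Collectors;

public class AndStepSketch {
    // Toy stand-in for a person vertex; not a TinkerPop class.
    static final class Person {
        final String name;
        final int age;
        final List<String> knows;

        Person(String name, int age, List<String> knows) {
            this.name = name;
            this.age = age;
            this.knows = knows;
        }
    }

    // Passes only if every child "traversal" produces at least one result.
    @SafeVarargs
    static boolean and(Person start, Function<Person, List<?>>... traversals) {
        for (Function<Person, List<?>> traversal : traversals) {
            if (traversal.apply(start).isEmpty()) {
                return false;
            }
        }
        return true;
    }

    static List<String> youngAndKnowsSomeone() {
        List<Person> people = Arrays.asList(
            new Person("marko", 29, Arrays.asList("vadas", "josh")),
            new Person("vadas", 27, Collections.emptyList()),   // age assumed
            new Person("josh", 32, Collections.emptyList()),    // age assumed
            new Person("peter", 35, Collections.emptyList()));  // age assumed
        return people.stream()
            .filter(p -> and(p,
                x -> x.knows,                                   // like outE('knows')
                x -> x.age < 30                                 // like values('age').is(lt(30))
                    ? Collections.singletonList(x.age)
                    : Collections.emptyList()))
            .map(p -> p.name)
            .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(youngAndKnowsSomeone());
    }
}
```

Note that the child traversals are only tested for emptiness; what they return is discarded, and the original object is what passes on to the next step.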

An infix notation can be used as well, though with infix notation only two traversals can be and'd together.

gremlin> g.V().where(outE('created').and().outE('knows')).values('name')

==>marko

As Step

The as()-step is not a real step, but a "step modulator" similar to by() and option(). With as(), it is possible to provide a label to the step that can later be accessed by steps and data structures that make use of such labels — e.g., select(), match(), and path.

gremlin> g.V().as('a').out('created').as('b').select('a','b') //(1)

==>[a:v[1], b:v[3]]

==>[a:v[4], b:v[5]]

==>[a:v[4], b:v[3]]

==>[a:v[6], b:v[3]]

gremlin> g.V().as('a').out('created').as('b').select('a','b').by('name') //(2)

==>[a:marko, b:lop]

==>[a:josh, b:ripple]

==>[a:josh, b:lop]

==>[a:peter, b:lop]

1. Select the objects labeled "a" and "b" from the path.

2. Select the objects labeled "a" and "b" from the path and, for each object, project its name value.

A step can have any number of labels associated with it. This is useful for referencing the same step multiple times in a future step.

gremlin> g.V().hasLabel('software').as('a','b','c').

select('a','b','c').

by('name').

by('lang').

by(__.in('created').values('name').fold())

==>[a:lop, b:java, c:[marko, josh, peter]]

==>[a:ripple, b:java, c:[josh]]

Barrier Step

The barrier()-step (barrier) turns the lazy traversal pipeline into a bulk-synchronous pipeline. This step is useful in the following situations:

- When everything prior to barrier() needs to be executed before moving on to the steps after the barrier() (i.e. ordering).

- When "stalling" the traversal may lead to a "bulking optimization" in traversals that repeatedly touch many of the same elements (i.e. optimizing).
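The ordering effect can be modelled with plain Java iterators. The sketch below is an assumption-laden illustration, not TinkerPop's implementation: the class BarrierSketch and its sideEffect() and barrier() helpers are hypothetical. For brevity, this barrier() drains its input as soon as the pipeline is assembled rather than on the first pull, and it performs no bulking.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Iterator;
import java.util.List;
import java.util.function.Consumer;

public class BarrierSketch {
    // Lazy step: runs the consumer on each element only as it is pulled.
    static <T> Iterator<T> sideEffect(Iterator<T> in, Consumer<T> consumer) {
        return new Iterator<T>() {
            @Override
            public boolean hasNext() {
                return in.hasNext();
            }

            @Override
            public T next() {
                T value = in.next();
                consumer.accept(value);
                return value;
            }
        };
    }

    // Barrier: drains everything upstream into a buffer before handing anything on.
    static <T> Iterator<T> barrier(Iterator<T> in) {
        List<T> buffer = new ArrayList<>();
        in.forEachRemaining(buffer::add);
        return buffer.iterator();
    }

    static List<String> run(boolean useBarrier) {
        List<String> log = new ArrayList<>();
        Iterator<Integer> it = Arrays.asList(1, 2, 3).iterator();
        it = sideEffect(it, v -> log.add("first: " + v));
        if (useBarrier) {
            it = barrier(it);
        }
        it = sideEffect(it, v -> log.add("second: " + v));
        it.forEachRemaining(v -> { }); // comparable to iterate(): pull everything, discard results
        return log;
    }

    public static void main(String[] args) {
        System.out.println(run(false)); // interleaved first/second entries
        System.out.println(run(true));  // all first entries, then all second entries
    }
}
```

Without the barrier each element runs through both side effects before the next one is pulled; with it, every element passes the first side effect before any reaches the second.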

gremlin> g.V().sideEffect{println "first: ${it}"}.sideEffect{println "second: ${it}"}.iterate()

first: v[1]

second: v[1]

first: v[2]

second: v[2]

first: v[3]

second: v[3]

first: v[4]

second: v[4]

first: v[5]

second: v[5]

first: v[6]

second: v[6]

gremlin> g.V().sideEffect{println "first: ${it}"}.barrier().sideEffect{println "second: ${it}"}.iterate()

first: v[1]

first: v[2]

first: v[3]

first: v[4]

first: v[5]

first: v[6]

second: v[1]

second: v[2]

second: v[3]

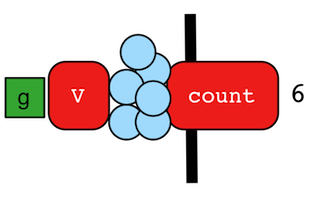

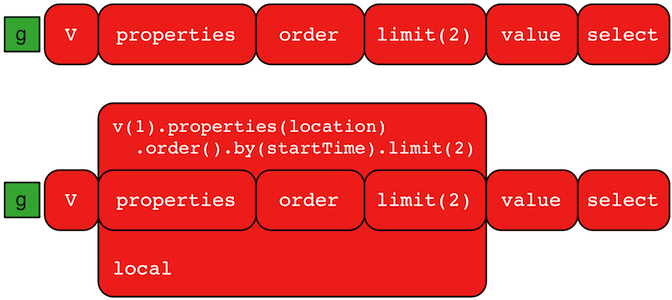

second: v[4]